In this second part of the comparison between Google Cloud Run and AWS Lambda, we put the two to the test with a series of benchmarks focusing on raw performance, latencies, and the performance/cost ratio of both products. With these results, you’ll be better informed and more capable of deciding which solution is best for your particular use case(s).

If you haven’t yet read Part 1 and are interested in learning how these products differ in terms of their features, I highly recommend doing so.

The code for this post, along with the resulting dataset for all benchmarks, is open-source and available on GitHub.

CPU Performance

For the CPU performance benchmark, I used the sysbench CPU test. It is a simple prime-number test that reports the number of events per second, where each event calculates all prime numbers up to 10,000.

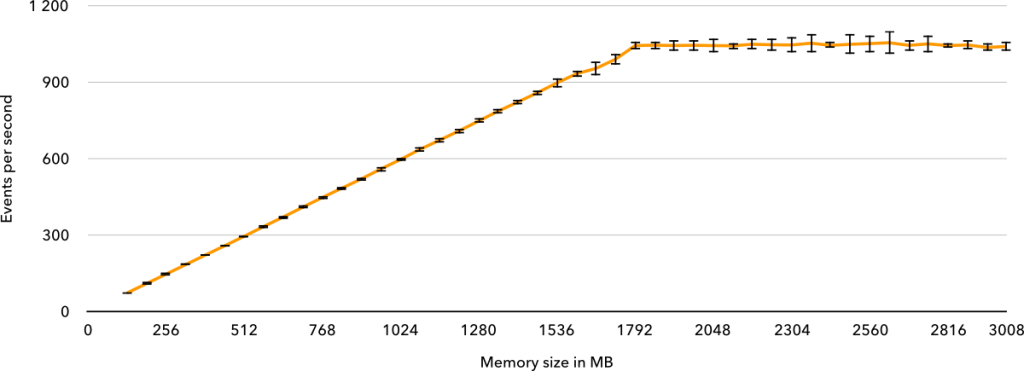

Single-Threaded Lambda

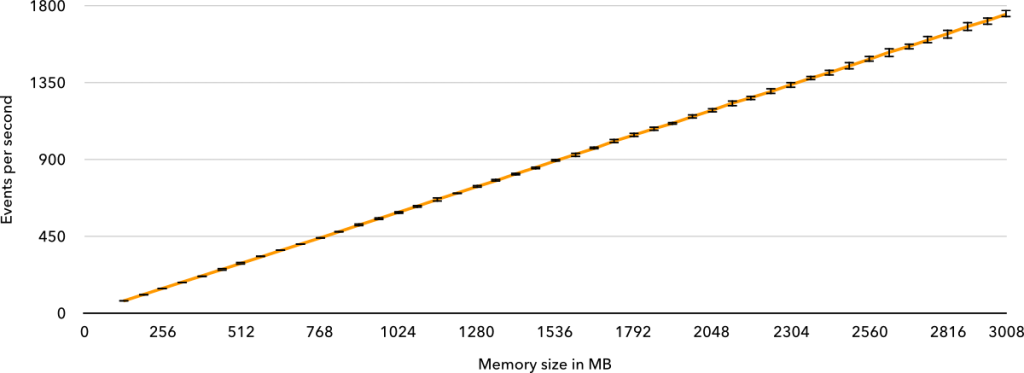

We know that Lambda CPU performance scales with provisioned memory, but does that hold until its current limit of 3,008 MB or does it plateau at some point? What about multi-threaded performance? Let’s find out!

For each test run, 100 parallel calls were made to each service to provide a decent sample size, and this was repeated for each configuration. For Lambda, that meant 100 invocations for every available memory configuration (all 46 of them!). Cloud Run required only two configurations, one for each vCPU count (1 and 2).
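The figure of 46 follows from Lambda's memory options at the time: 128 MB to 3,008 MB in 64 MB increments. The increment comes from AWS's limits at the time, not from the benchmark code. The following short Go sketch shows the resulting test matrix. `lambdaMemorySizes` is a hypothetical helper.

```go
package main

import "fmt"

// lambdaMemorySizes lists the Lambda memory settings available when these
// benchmarks ran: 128 MB up to 3,008 MB in 64 MB steps.
func lambdaMemorySizes() []int {
	var sizes []int
	for mb := 128; mb <= 3008; mb += 64 {
		sizes = append(sizes, mb)
	}
	return sizes
}

func main() {
	sizes := lambdaMemorySizes()
	invocationsPerConfig := 100
	fmt.Println("configurations:", len(sizes))                         // 46
	fmt.Println("total invocations:", len(sizes)*invocationsPerConfig) // 4600
}
```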

AWS Lambda peaks at an average of 1,047 events per second at the 1,792 MB memory size. Between 128 MB and 1,792 MB, the events per second per MB remains steady at an average (and median) of around 0.58, with a standard deviation of just 0.0095. The error bars in all charts represent the standard deviation.

Lambda duration pricing is based on GB-seconds (allocated memory multiplied by execution time). Because single-threaded throughput grows in proportion to memory, we can confirm that single-threaded CPU-bound tasks in Lambda end up costing the same regardless of the chosen memory size, up to 1,792 MB. Doubling the memory doubles the price per second but halves the run time. Beyond that point, CPU-bound code that doesn't need the extra memory becomes more expensive to run, as Lambda no longer offers any additional single-threaded CPU performance.

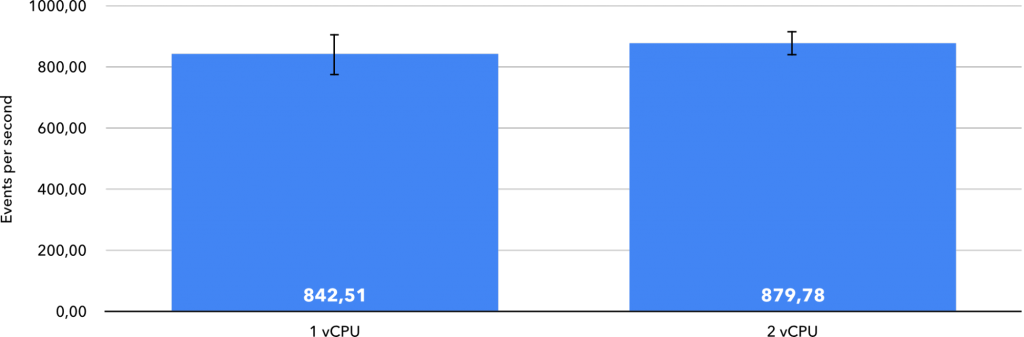

Single-Threaded Cloud Run

With Cloud Run, the results fluctuate much more. The chart below shows the average events per second, which have surprisingly high standard deviations of 68.83 and 41.66 for 1 and 2 vCPUs, respectively. For comparison, the Lambda configuration closest in CPU performance, at 1,472 MB of memory, had a standard deviation of only 9.64. With such high variability, I wouldn't conclude that the number of vCPUs affects single-thread performance, as the two results fall within each other's margin of error.
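The margin-of-error argument amounts to checking whether the two mean ± one standard deviation ranges overlap. The Go sketch below uses hypothetical means, because the post reports only the standard deviations. This is a rough rule of thumb, not a formal significance test, and `withinMargin` is a hypothetical name.

```go
package main

import (
	"fmt"
	"math"
)

// withinMargin reports whether two mean ± standard-deviation ranges overlap,
// i.e. whether the gap between the means is at most the sum of the deviations.
func withinMargin(meanA, sdA, meanB, sdB float64) bool {
	return math.Abs(meanA-meanB) <= sdA+sdB
}

func main() {
	// Hypothetical means; only the standard deviations come from the post.
	fmt.Println(withinMargin(850, 68.83, 870, 41.66)) // true
}
```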

Two things are clear: AWS Lambda offers better single-threaded CPU performance than Cloud Run, and that performance is more predictable between runs. On average, Lambda is about 24% faster in single-threaded performance when compared against Cloud Run (1 vCPU).

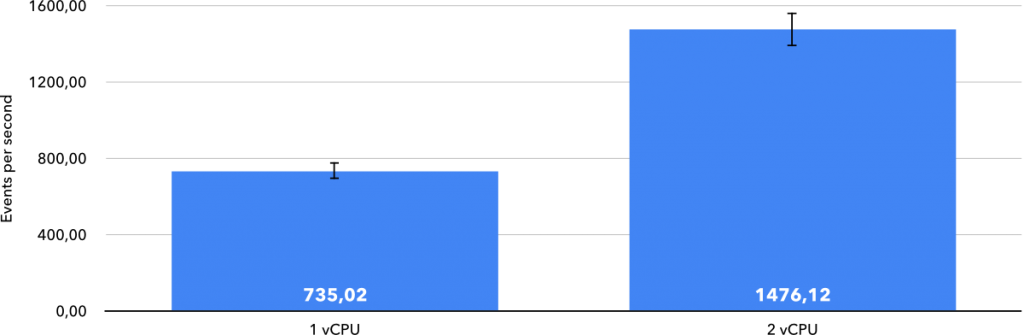

Multi-Threaded

With eight threads, it's good to see that Lambda's performance keeps growing linearly up to its current limit of 3,008 MB, where it hits an average of 1,752 events per second. As in the single-threaded benchmark, it maintains an average and median of nearly 0.58 events per second per MB, but with an even lower standard deviation of 0.0082. It's remarkable to see so little variability in performance between executions. While it's undocumented, this test shows that beyond about 1,792 MB of allocated memory, Lambda provides more virtual CPUs or more threads per CPU.

With Cloud Run, as expected, the number of events per second doubles with the addition of the second vCPU. However, per-vCPU performance drops by 12.76% when moving from one to eight threads, while Lambda's performance decreased by only 0.66%. A slight decrease is expected at higher thread counts, but Cloud Run's drop seems extreme.

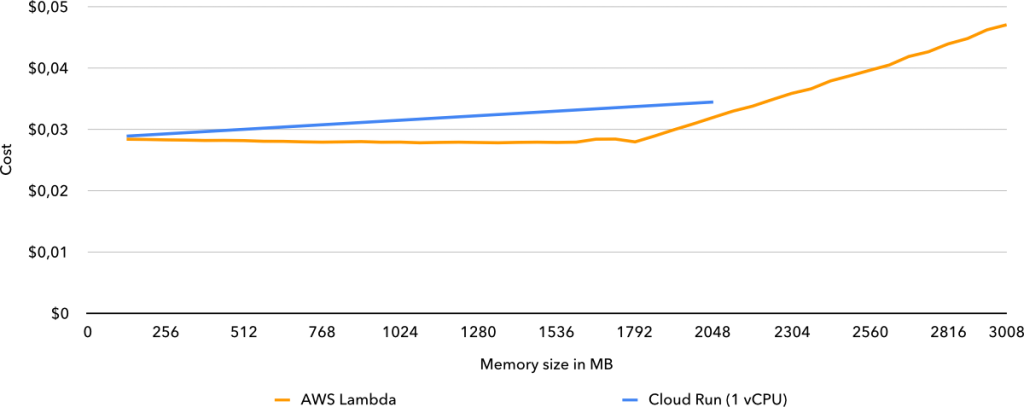

Cost

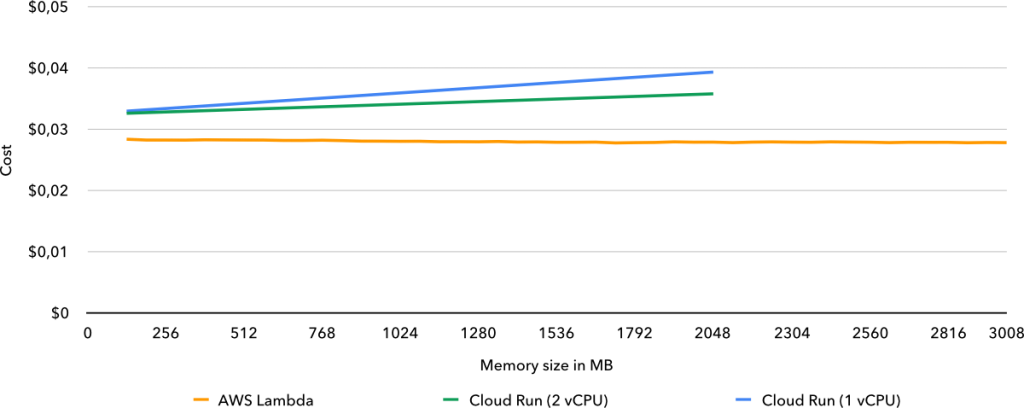

To compare the cost of both products for completely CPU-bound tasks, I plotted how much it would cost to run one million events on each service and configuration. The number of events is arbitrary; the goal is to see the cost difference for the exact same job.

For purely CPU-bound tasks, Lambda was always the cheapest option, with Cloud Run costing anywhere from 1.8% to 28.2% more, depending on the required memory. Suppose Cloud Run supported more than 2 GB of memory at its current price. In that case, it would have been cheaper than Lambda in the single-threaded benchmark for workloads needing more than about 2.2 GB.
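The cost of a fixed CPU-bound job is the number of events divided by the measured throughput, times the configuration's price per second. In the Go sketch below, only the Lambda GB-second price comes from this post. The throughputs and the Cloud Run per-vCPU-second and per-GiB-second rates are placeholders. Replace them with measured values and Google's current published pricing. All function names are hypothetical.

```go
package main

import "fmt"

// costForJob returns the duration cost of completing `events` units of
// CPU-bound work at a given throughput and price per second.
func costForJob(events, eventsPerSec, pricePerSecond float64) float64 {
	return events / eventsPerSec * pricePerSecond
}

// lambdaPricePerSecond converts a GB-second price into a per-second price
// for a given memory size.
func lambdaPricePerSecond(memoryMB, pricePerGBSecond float64) float64 {
	return memoryMB / 1024 * pricePerGBSecond
}

// cloudRunPricePerSecond combines per-vCPU-second and per-GiB-second rates
// (placeholders here; substitute current published rates).
func cloudRunPricePerSecond(vCPUs, memoryGiB, perVCPUSecond, perGiBSecond float64) float64 {
	return vCPUs*perVCPUSecond + memoryGiB*perGiBSecond
}

func main() {
	const lambdaGBSecond = 0.0000166667 // from the note at the end of this post
	// Placeholder throughputs and Cloud Run rates, for illustration only.
	lambda := costForJob(1e6, 593.92, lambdaPricePerSecond(1024, lambdaGBSecond))
	cloudRun := costForJob(1e6, 850, cloudRunPricePerSecond(1, 1, 0.000024, 0.0000025))
	fmt.Printf("Lambda: $%.4f  Cloud Run: $%.4f  difference: %+.1f%%\n",
		lambda, cloudRun, (cloudRun/lambda-1)*100)
}
```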

Comparing to VMs

For perspective, I thought it would be interesting to see how these numbers compare to VMs in both clouds. In the single-thread benchmark, a Google Compute Engine N1 instance scored 939.14 events per second, while an Amazon EC2 C5n instance scored 1,155.87 events per second, both on the Intel Skylake architecture. In terms of single-thread CPU performance, these serverless offerings are not far behind the VMs of their respective clouds.

Unfortunately, I was not able to compare them with GCP’s latest N2/C2 instances, as these appear to be in such high demand that even my quota request for a single instance was rejected.

Cold Starts and Latencies

Cold starts have always been one of Lambda's major limitations, but they are inevitable in platforms that both scale to zero and scale up automatically. It's also easy to forget that the cold start of a function or container is still much shorter than that of a virtual machine. But let's see how Lambda and Cloud Run compare.

For this benchmark, 200 calls were made to each service in batches of 10 parallel calls, with an environment variable changed between batches to force a cold start. The same procedure was then repeated without the environment variable change to simulate warm calls. The response times were recorded, and the difference between the median cold and median warm response times was taken as the cold start overhead. These tests were run from VMs in the same cloud and region as the Lambda function or Cloud Run service under test to minimize external factors.

For both services, the code was written in Go and did nothing but sleep for five seconds. The purpose of the sleep call was to ensure that no instance would be reused during the parallel calls, giving us more reliable results.

Lambda’s cold start time added 210 milliseconds to the response time, while Cloud Run’s cold start added a whopping 1,090 milliseconds. It appears that Lambda’s cold starts are currently 5.2x faster than Cloud Run’s.

VPC

This story, however, is a bit different when a VPC is involved. When configured to access resources in a VPC, Lambda's cold start time was 404 milliseconds, whereas Cloud Run's was 1,232 milliseconds, narrowing Lambda's lead to about 3x. I find this result particularly interesting because of how the two are implemented. Lambda's VPC access works through an Elastic Network Interface that the function attaches to, which is where the extra latency originates. Cloud Run, on the other hand, relies on a separately running VPC connector. The connector not only incurs additional (albeit small) charges but also requires provisioning throughput. Because the connector is a separate component, I did not expect Cloud Run's cold start time to change at all when a VPC was used, yet it grew by 142 milliseconds. I hope Google can remove this difference. Cloud Run's cold start is already much higher than Lambda's, and the connector adds to the cost.

Latency

Using these same results, we can also analyze the latency of warm calls. We do this by subtracting the code's run time (5,000 milliseconds) from the time between request and response. The median Lambda latency was 51 milliseconds, while Cloud Run's was only 32 milliseconds. Measured from a VM in the same region, Cloud Run's latency was therefore about 37% lower than Lambda's.

Cloud Run Concurrency

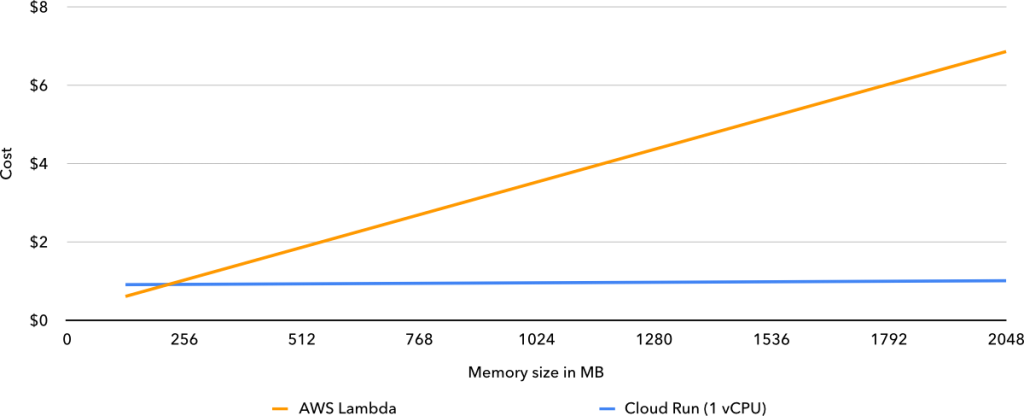

Finally, we get to test the one feature where Cloud Run may take the lead. The hypothesis here is that for I/O-bound workloads, Cloud Run may be significantly cheaper than Lambda when there are concurrent requests, as a single container can handle up to 80 requests concurrently.

Because we're testing an I/O-bound scenario, I made one million requests, with a concurrency level of 200, to a Cloud Run service that sleeps for 200 milliseconds. In the best case, I would be charged for just three container instances, which together can handle all 200 concurrent requests. In reality, I was billed for about 19 container instances for the duration of the benchmark (slightly over 18 minutes).

Exactly when and how Cloud Run spawns container instances is a black box, but I presume Google could further optimize it for cost. Even so, as the chart shows, Cloud Run can be over 6x cheaper than Lambda for I/O-bound concurrent workloads, depending on the allocated memory.

Request costs are also a factor: $0.20 per million requests for Lambda and $0.40 per million for Cloud Run. If you want to expose these as an HTTP service, Lambda also incurs costs for either an API Gateway or an Elastic Load Balancer, while Cloud Run requires nothing extra.
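The two billing models explain the gap. Lambda bills every request for its own duration, while Cloud Run bills instance time no matter how many requests each instance serves concurrently. The Go sketch below models both, including the per-million request fees. It ignores Lambda's billing granularity and any API Gateway or load balancer charges. The Cloud Run per-instance-second price is a placeholder, not a published rate, and the function names are hypothetical.

```go
package main

import "fmt"

// lambdaIOCost bills every request for its own duration at the configured
// memory, plus a per-million-requests fee.
func lambdaIOCost(requests, secondsPerRequest, memoryGB, pricePerGBSecond, perMillionRequests float64) float64 {
	duration := requests * secondsPerRequest * memoryGB * pricePerGBSecond
	return duration + requests/1e6*perMillionRequests
}

// cloudRunIOCost bills instance time regardless of how many requests each
// instance handled concurrently, plus a per-million-requests fee.
func cloudRunIOCost(instances, seconds, pricePerInstanceSecond, requests, perMillionRequests float64) float64 {
	return instances*seconds*pricePerInstanceSecond + requests/1e6*perMillionRequests
}

func main() {
	// Lambda: one million 200 ms requests at 1 GB, using this post's GB-second price.
	lambda := lambdaIOCost(1e6, 0.2, 1.0, 0.0000166667, 0.20)
	// Cloud Run: about 19 instances for about 18 minutes, as observed; the
	// per-instance-second price is a hypothetical placeholder.
	cloudRun := cloudRunIOCost(19, 18*60, 0.00002, 1e6, 0.40)
	fmt.Printf("Lambda: $%.2f  Cloud Run: $%.2f\n", lambda, cloudRun)
}
```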

What’s Next?

In the next and final part of this Google Cloud Run vs. AWS Lambda series, we’ll be incorporating these results into clear use-case recommendations for both services, suggestions for improvements, and personal opinions. In the meantime, check out these past IOD blog posts on AWS Lambda.

Note: The regions used for these benchmarks were us-east-1 for AWS and us-central1 for GCP. The duration pricing for Lambda used in calculations was $0.0000166667 for every GB-second, which is the most common price in the US and EU regions.