In over five years of service, AWS Lambda has spawned multiple attempts at cloning by most leading cloud providers (Google Cloud included). And while everyone else added some little twist to their offering, there hasn’t been much innovation in the serverless compute field since the original Lambda was introduced. The posts in this series aim to answer the question: Is Cloud Run finally a serverless game changer?

If you’re new to this series, I recommend reading part 1, which compares the features and specifications of both products, and part 2, which puts Lambda and Cloud Run head-to-head with a variety of benchmarks.

In this final part, we’ll be looking at different use cases for the two services based on personal experience, suggestions for improvement, and a summary of the key findings from previous posts.

Functions vs. Containers

It’s worth addressing some terminology first, as it can affect the perception of the purpose of each service. After all, the word “function” is everywhere with AWS Lambda, whereas Cloud Run talks about containers and containerized applications. And, in general, when it comes to categorizing compute services, more and more buzzword abbreviations ending in “aaS” are being created.

While a Lambda function can be as small as a single line of code, and a Cloud Run container runs a full-blown OCI image (commonly referred to as a Docker image), the main technical difference is the packaging format. Initially, it was only possible to write Lambda functions in a handful of programming languages—based on the supported runtimes provided by AWS—while containers have no such restrictions.

However, since the introduction of custom AWS Lambda runtimes in 2018, this has no longer been an issue. The difference that remains today is that Cloud Run uses an open standard for packaging, while Lambda continues to use a proprietary, albeit well-documented, packaging format.
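To give a feel for what a custom runtime involves, here is a minimal sketch of the loop such a runtime performs against the Lambda Runtime API. The `handle` function and the `main` entry point are hypothetical names of mine, and error reporting is left out for brevity, so treat this as an illustration rather than a production runtime.

```python
import json
import os
import urllib.request

API_VERSION = "2018-06-01"  # version prefix used by the Lambda Runtime API


def handle(event):
    """Hypothetical business logic; replace with your own."""
    return {"echo": event}


def process_one(runtime_api):
    """Fetch one invocation, run the handler, and post the result back."""
    base = f"http://{runtime_api}/{API_VERSION}/runtime/invocation"

    # Ask for the next event; the request ID arrives in a response header.
    with urllib.request.urlopen(f"{base}/next") as resp:
        request_id = resp.headers["Lambda-Runtime-Aws-Request-Id"]
        event = json.loads(resp.read())

    # Report the handler's result for that specific request ID.
    body = json.dumps(handle(event)).encode()
    req = urllib.request.Request(
        f"{base}/{request_id}/response", data=body, method="POST"
    )
    with urllib.request.urlopen(req) as resp:
        resp.read()
    return request_id


def main():
    # Lambda exposes the API endpoint (host:port) in this environment variable.
    api = os.environ["AWS_LAMBDA_RUNTIME_API"]
    while True:
        process_one(api)
```

In a real deployment, the package's `bootstrap` executable would start this loop by calling something like `main()`.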

Actually, Lambda function packages can be of a significant size (up to 50 MB compressed and 250 MB uncompressed), include shared libraries, and even arbitrary executables that functions can run as child processes. So no, Lambda is not restricted to small functions that perform simple tasks.
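As a rough illustration of the child-process point, the sketch below shows a Lambda-style handler that hands its input to an external program. I'm using the Python interpreter itself as a stand-in for a bundled executable, and the `text` event field is an assumption made for the example.

```python
import subprocess
import sys


def handler(event, context):
    """Lambda-style handler that delegates work to a child process."""
    # Stand-in for a bundled binary; in a real package this could be any
    # executable shipped inside the deployment archive.
    command = [sys.executable, "-c", "import sys; print(sys.stdin.read().upper())"]

    result = subprocess.run(
        command,
        input=event.get("text", ""),
        capture_output=True,
        text=True,
        timeout=10,  # keep this well below the function timeout
        check=True,  # raise if the child exits with a non-zero status
    )
    return {"output": result.stdout.strip()}
```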


Four Use Cases

Both Cloud Run and Lambda integrate with many other cloud services, but it should be no surprise that Lambda has a longer list of integrations. Lambda has been around the longest, and AWS, in general, offers more services. Still, there is a lot in common in regard to what can be achieved, with a few differences worth highlighting.

There are also some differences in how integrations are set up. For example, an S3 bucket can directly trigger a Lambda function, while a Cloud Storage bucket requires a Pub/Sub topic and subscription to trigger a Cloud Run service.
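The difference also shows up in the payload your code receives. Here is a small sketch of extracting the bucket and object name in both cases, assuming the standard S3 event notification shape on the Lambda side and a Pub/Sub push envelope carrying a Cloud Storage notification in its JSON payload format on the Cloud Run side. The function names are my own.

```python
import base64
import json
from urllib.parse import unquote_plus


def object_from_s3_event(event):
    """Extract (bucket, key) from an S3 notification delivered to Lambda."""
    record = event["Records"][0]["s3"]
    # Object keys arrive URL-encoded in S3 event notifications.
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])


def object_from_pubsub_push(envelope):
    """Extract (bucket, name) from a Pub/Sub push body sent to Cloud Run."""
    message = envelope["message"]
    # The object resource is base64-encoded JSON inside the message data.
    payload = json.loads(base64.b64decode(message["data"]))
    return payload["bucket"], payload["name"]
```

On Cloud Run, the envelope is simply the JSON body of the incoming HTTP POST request, so the service has to parse it itself.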

Data and Stream Processing

Data and stream processing have always been excellent use cases for serverless computing due to their pricing and scalability models. The ability to quickly handle any amount of data in near real time without any capacity planning or idle computing costs is, to this day, the biggest strength in these product offerings.

And since many (arguably most?) data-processing tasks are CPU intensive, AWS Lambda continues to be an outstanding choice for these. As we concluded from the benchmarks, not only does Lambda offer better CPU performance, it also has a lower cold start time, which produces faster results from data-processing tasks.

Lambda is also likely to be cheaper for CPU-bound tasks, where Cloud Run’s concurrency model offers little or no advantage. As we previously concluded, high concurrency in a single container is the only way to bring Cloud Run’s price down.
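To make the reasoning concrete, here is a back-of-the-envelope sketch of billed instance time. It is a deliberately simplified model of my own (one vCPU, perfect packing of requests, no cold starts), not either provider's actual billing formula.

```python
def billed_seconds(requests, seconds_per_request, concurrency, cpu_bound):
    """Instance-seconds billed for a batch of requests under ideal packing."""
    if cpu_bound:
        # Requests sharing one vCPU slow each other down, so running
        # `concurrency` of them together takes roughly that many times longer.
        seconds_per_request *= concurrency
    return requests * seconds_per_request / concurrency
```

Under these assumptions, an I/O-bound workload at a concurrency of 80 is billed for 1/80 of the instance time it would need at a concurrency of 1, while a CPU-bound workload is billed for the same amount either way.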

Application Backends

Serverless application backends have some great benefits. The biggest one is probably the ability to handle variable and even unpredictable amounts of traffic without worries (do you also see a pattern here?). But users of your applications expect a certain level of responsiveness, so combating cold starts is crucial, especially in synchronous operations such as these.

Most commonly, applications communicate with their backends using HTTP, whether they use REST, GraphQL, or something else. Both Lambda and Cloud Run support HTTP requests, but in vastly different ways. It is not possible to expose a Lambda function directly to the public internet. Instead, functions are invoked through a front end such as Amazon API Gateway, Elastic Load Balancing, or even AWS AppSync for GraphQL.
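For reference, this is roughly what a Lambda function looks like when it sits behind an API Gateway proxy integration: the HTTP request arrives as an event dictionary, and the function returns a dictionary describing the response. The `name` query parameter is just an assumption for the example.

```python
import json


def handler(event, context):
    """Lambda handler behind an API Gateway proxy integration."""
    # Query parameters arrive in the event; the value is null when there are none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    # API Gateway turns this dictionary into the HTTP response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```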

Cloud Run, by contrast, speaks HTTP natively, which makes it well-suited for application backends. It’s still possible to use a managed API gateway such as Cloud Endpoints, but it’s not required. And that’s not all: Cloud Run also supports gRPC and larger request and response payloads, making it a great contender for deploying microservices that traditionally would need an orchestrator such as Kubernetes.
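On the Cloud Run side, the container only has to run an ordinary HTTP server on the port passed in the `PORT` environment variable. The following is a minimal standard-library sketch; the `make_server` helper and the response body are my own choices, and a real service would more likely use a web framework.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = json.dumps({"message": "Hello from a container"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=None):
    """Build a server on the port Cloud Run passes in the PORT variable."""
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    # A threading server lets one instance serve several requests at once.
    return ThreadingHTTPServer(("0.0.0.0", port), Handler)
```

The container's entry point would then call `make_server().serve_forever()`.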

In addition, because backends typically communicate over the network with other services or data storage, they are, more often than not, I/O-bound. And as we were able to prove in the benchmarks, Cloud Run does particularly well in high-concurrency, I/O-bound workloads, especially from a cost perspective.
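The intuition behind this is easy to reproduce locally. In the sketch below, a sleep stands in for a database or API round trip, and a single process handles a whole batch of such requests at once. It is only a simulation, not a reproduction of the benchmarks from part 2.

```python
import asyncio
import time


async def handle_request(io_seconds):
    """Simulate a request that spends its time waiting on a downstream call."""
    await asyncio.sleep(io_seconds)  # stand-in for a database or API round trip
    return "ok"


async def serve_concurrently(count, io_seconds):
    """Handle `count` requests at once in one process and time the batch."""
    started = time.perf_counter()
    results = await asyncio.gather(
        *(handle_request(io_seconds) for _ in range(count))
    )
    return results, time.perf_counter() - started
```

Because the requests spend their time waiting rather than computing, the batch finishes in roughly the time of a single request, which is why one container instance can absorb many of them.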

Edge Computing

Edge computing may be another trendy buzz phrase, but that doesn’t mean there aren’t valid use cases for computing near users. And while AWS has only a little over 20 regions today, it has over 200 points of presence (PoPs) in more than 40 countries as part of its CloudFront CDN offering.

Running code at these locations is something that Google Cloud doesn’t currently support. Lambda@Edge is a genuinely fantastic feature: restricted Lambda functions can run directly at the CDN PoPs. And even though it’s currently limited to Node.js and Python runtimes and has lower timeouts and payload sizes, it still allows for a vast range of use cases such as content personalization and analytics.
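As an example of content personalization, here is a sketch of a Lambda@Edge handler that rewrites the home page URI based on the viewer's country. It assumes an origin-request trigger with CloudFront configured to pass along the `CloudFront-Viewer-Country` header; the `LOCALIZED` mapping and the URL layout are hypothetical.

```python
# Hypothetical mapping from country code to a localized site section.
LOCALIZED = {"DE": "de", "FR": "fr"}


def handler(event, context):
    """Origin-request handler that routes users to a localized home page."""
    request = event["Records"][0]["cf"]["request"]
    headers = request["headers"]

    # Header names are lowercased; each maps to a list of key/value pairs.
    # The country header is only present if CloudFront is set up to add it.
    country = headers.get("cloudfront-viewer-country", [{"value": "US"}])[0]["value"]

    if request["uri"] == "/" and country in LOCALIZED:
        request["uri"] = f"/{LOCALIZED[country]}/index.html"

    # Returning the request tells CloudFront to continue processing it.
    return request
```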

Orchestration

One of the challenges of developing microservices is reliably orchestrating multiple subtasks that need to run in a particular order, be retried on failure, and tracked to completion. AWS Step Functions takes care of all this in a simple, pay-as-you-go model.
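To show what this looks like in practice, here is a sketch of a two-step workflow expressed in the Amazon States Language, built as a Python dictionary that can be serialized to JSON. The state names are made up, and the function ARNs are placeholders you would supply yourself.

```python
import json


def build_state_machine(resize_arn, notify_arn):
    """Two Lambda tasks run in order; the first is retried on failure."""
    return {
        "Comment": "Illustrative workflow: resize an image, then notify",
        "StartAt": "Resize",
        "States": {
            "Resize": {
                "Type": "Task",
                "Resource": resize_arn,
                # Retry up to 3 times, doubling the wait between attempts.
                "Retry": [
                    {
                        "ErrorEquals": ["States.TaskFailed"],
                        "IntervalSeconds": 2,
                        "MaxAttempts": 3,
                        "BackoffRate": 2.0,
                    }
                ],
                "Next": "Notify",
            },
            "Notify": {"Type": "Task", "Resource": notify_arn, "End": True},
        },
    }


def to_definition(resize_arn, notify_arn):
    """Serialize the workflow to the JSON document Step Functions expects."""
    return json.dumps(build_state_machine(resize_arn, notify_arn), indent=2)
```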

Google Cloud, on the other hand, doesn’t have such an offering. The closest is Cloud Composer, a managed Apache Airflow service. However, its pricing is based on provisioned VMs, and it’s not currently listed among the services that integrate with Cloud Run.

Room for Improvement

As with everything, neither service is perfect, and each could learn and take some features from the other.

For Lambda, it would be really great to see an option to configure the concurrency level above 1. Such a feature would not only be a cost optimization; it could also eliminate some cold starts. Supporting container images in Lambda would also be a fantastic addition. However, it’s worth noting that AWS Labs has already released an open-source tool to do precisely this. Perhaps we’ll see this feature natively supported in the future. I sure hope so!

For a service introduced only last year, Cloud Run has already come very far. Compared to Lambda, I found its performance to be somewhat inconsistent between calls, and not as good in terms of cold-start times and raw CPU performance. I hope Google Cloud will eventually address these shortcomings.

But the most noticeable improvement, in my opinion, would be the ability to set a reserved container instance count, similar to Lambda’s reserved concurrency. Estimating cost was something I also found a bit hard to do. It’s unpredictable (and undocumented) how Cloud Run decides whether to route concurrent requests to an existing container instance or to spawn a new one. My testing found that the actual number of simultaneous requests handled by a single container instance was much lower than the defined concurrency limit.
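One way to see why this matters for cost estimates is a rough sizing calculation based on Little's law (requests in flight equal arrival rate times latency). This is my own approximation, not how Cloud Run's autoscaler is documented to work, and `effective_concurrency` stands for whatever per-instance concurrency you actually observe.

```python
import math


def instances_needed(requests_per_second, latency_ms, effective_concurrency):
    """Estimate how many instances are busy at a steady request rate."""
    # Little's law: average requests in flight = arrival rate x latency.
    in_flight = requests_per_second * latency_ms / 1000
    return math.ceil(in_flight / effective_concurrency)
```

At 400 requests per second and 200 ms per request, this estimate gives a single instance if the configured limit of 80 is reached, but four instances if each one only ends up handling 20 requests at a time.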

Some Key Points to Remember

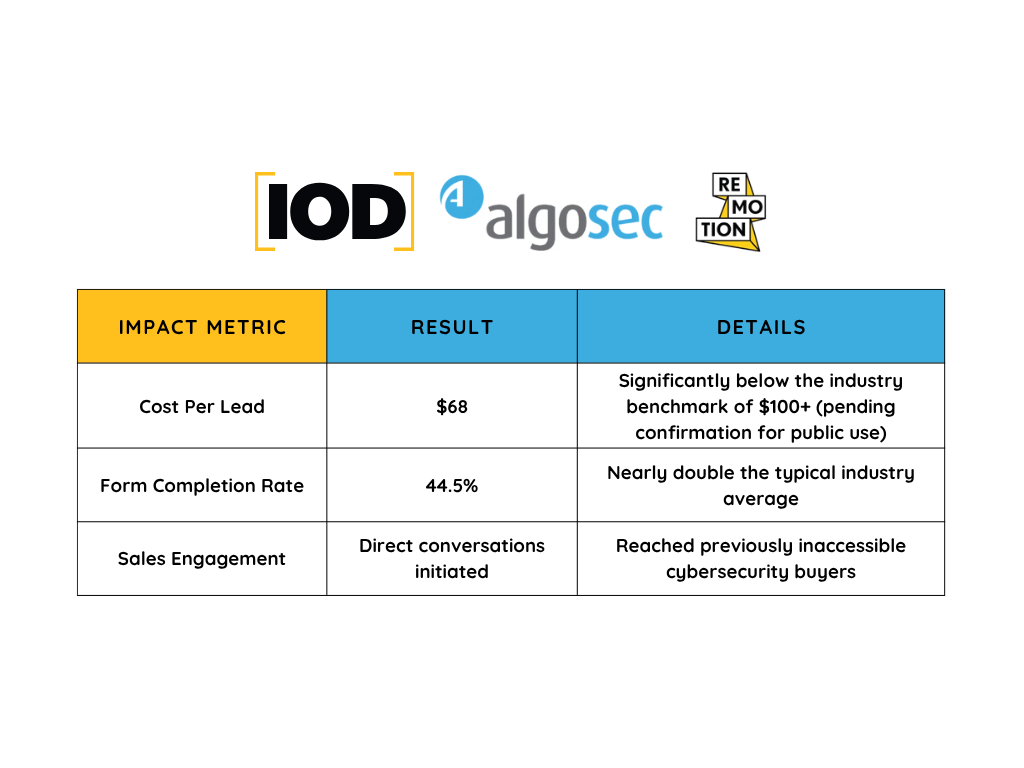

Before we go, let’s recap and summarize some of the key points from past posts. (Bold italicized text below indicates the better value.)

| | AWS Lambda | Google Cloud Run |
| --- | --- | --- |
| Max Execution Time | 15 minutes | 15 minutes |
| Max Payload Size | 6 MB | ***32 MB*** |
| Max Memory | ***3008 MB*** | 2048 MB |
| Max Deployment Package Size | 50 MB (zipped), 250 MB (uncompressed) | ***No limit*** |
| Packaging Format | Proprietary | ***Open (OCI image)*** |
| Max Concurrency per Instance | 1 | ***80*** |
| Sysbench Performance (single-threaded, average) | ***1047 events/sec*** | 880 events/sec |
| Sysbench Performance (multi-threaded, average) | ***1752 events/sec*** | 1476 events/sec |
| Cold Start (median) | ***210 ms*** | 1090 ms |
| VPC Cold Start (median) | ***404 ms*** | 1232 ms |
| Latency | 51 ms | ***32 ms*** |

For more context, be sure to check part 1 and part 2 of this series.

Is Cloud Run a Game Changer?

First of all, if you’ve made it through all three posts in the series—congratulations! It can be a lot of information to digest, but I sincerely hope it’s been helpful. While this last part contains personal opinions based on the previous posts, I hope you can also draw your own conclusions, particularly from the benchmarks.

I find both products to be quite sufficient for most use cases, and while Lambda does have some limitations, it also shows its maturity as a leader in the serverless compute field. I’ve personally used both services, and will happily continue to do so, based on which cloud provider is already in use. I would also be much more likely to choose Cloud Run for new deployments than Cloud Functions. And if you want to find a comparison between AWS Lambda and Azure Functions, be sure to also check our article on the topic.

To conclude, do I think Cloud Run is a serverless game changer?

The fact alone that it’s built on open standards such as the Knative Serving API and OCI images, and that a compatible open-source solution exists (Knative) that can be deployed anywhere, is enough for me to say that yes, it is. And the fact that it’s offered by a leading cloud provider is just the cherry on top.
