Serverless comes with the promise of infinite scalability, but what does this mean in practice? Services like AWS Lambda don't magically scale up to thousands of parallel invocations in an instant. If a new invocation starts while all existing execution environments are busy, Lambda has to create a new one, which leads to a cold start, the specter of serverless. Thousands of invocations started in parallel will, in turn, lead to thousands of cold starts.
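Here is a deliberately simplified model that makes the link between parallel invocations and cold starts concrete. It assumes that an invocation can reuse any execution environment that is idle when it starts, and otherwise needs a new one (a cold start). It ignores how long the cold start itself takes, environments being shut down after idling, and Lambda's concurrency limits. `countColdStarts` is a made-up name for illustration, not part of any AWS API.

```javascript
// Simplified model (an assumption, not Lambda's documented scheduler):
// reuse an idle execution environment if one exists, otherwise create a new one.
function countColdStarts(invocations) {
  // invocations: array of { start, duration } in milliseconds
  const sorted = [...invocations].sort((a, b) => a.start - b.start);
  const busyUntil = []; // one entry per execution environment
  let coldStarts = 0;
  for (const { start, duration } of sorted) {
    const idle = busyUntil.findIndex((t) => t <= start);
    if (idle === -1) {
      coldStarts += 1; // no idle environment: a new one is needed
      busyUntil.push(start + duration);
    } else {
      busyUntil[idle] = start + duration; // warm start on an idle environment
    }
  }
  return coldStarts;
}

// 1,000 invocations started at the same moment: each needs its own environment.
const burst = Array.from({ length: 1000 }, () => ({ start: 0, duration: 200 }));
console.log(countColdStarts(burst)); // 1000

// 1,000 invocations started 300 ms apart: one environment handles all of them.
const sequential = Array.from({ length: 1000 }, (_, i) => ({ start: i * 300, duration: 200 }));
console.log(countColdStarts(sequential)); // 1
```

The same number of invocations can cost one cold start or a thousand, depending only on how they overlap in time.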

If you want to understand how your Lambda functions behave under load, a load test can give you the key information. In this post, I'll walk you through load testing AWS Lambda functions using a sample app.

What Is Load Testing?

Load testing is a way of checking how your system behaves under specific types of load. For web APIs, this usually means varying the number of clients that send requests to your API. For example, you can go from 100 to 10,000 clients sending requests in parallel and see whether response times or error rates change.
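The core idea fits in a few lines of JavaScript: start a given number of requests at once, measure how long each one takes, and repeat with more clients. This is a single-process sketch, not a replacement for the tools discussed later. `runLoadStep`, `runLoadTest`, and the `sendRequest` callback are hypothetical names, and the 95th percentile uses the simple nearest-rank method.

```javascript
// Hypothetical helper: fires `clients` requests at once and summarizes the results.
// `sendRequest` is any async function that performs one request and throws on failure.
async function runLoadStep(sendRequest, clients) {
  const results = await Promise.all(
    Array.from({ length: clients }, async () => {
      const started = Date.now();
      try {
        await sendRequest();
        return { ok: true, latencyMs: Date.now() - started };
      } catch {
        return { ok: false, latencyMs: Date.now() - started };
      }
    })
  );
  const latencies = results
    .filter((r) => r.ok)
    .map((r) => r.latencyMs)
    .sort((a, b) => a - b);
  // Nearest-rank 95th percentile of the successful requests (null if none succeeded).
  const p95Ms = latencies.length > 0 ? latencies[Math.ceil(0.95 * latencies.length) - 1] : null;
  return { clients, errors: results.length - latencies.length, p95Ms };
}

// Runs one step per entry in `steps`, e.g. [100, 1000, 10000] clients, one after another.
async function runLoadTest(sendRequest, steps) {
  const report = [];
  for (const clients of steps) {
    report.push(await runLoadStep(sendRequest, clients));
  }
  return report;
}

// Stand-in for a real HTTP call: takes about 5 ms and fails on every tenth call.
let calls = 0;
async function fakeRequest() {
  calls += 1;
  const failing = calls % 10 === 0; // decide before awaiting, since `calls` keeps changing
  await new Promise((resolve) => setTimeout(resolve, 5));
  if (failing) throw new Error("simulated failure");
}

// Reports 10 errors for the first step and 100 for the second.
runLoadTest(fakeRequest, [100, 1000]).then((report) => console.log(report));
```

With a real endpoint, `sendRequest` could wrap `fetch` (available globally in Node.js 18 and later). A single machine will likely hit its own CPU or socket limits well before Lambda runs out of capacity, which is one reason the tools below spread the load across cloud infrastructure.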

What Is AWS Lambda?

AWS Lambda is the function-as-a-service solution from AWS. It's mainly used for gluing together components of your system that don't integrate directly, like API Gateway and a third-party service, or for processing huge amounts of data in parallel, for example, when analyzing log data.

Load Testing AWS Lambda

Let’s build a small web API based on AWS Lambda and AWS API Gateway and use different load testing tools to determine how the API behaves.

Prerequisites

I assume that you have an AWS account and that Node.js and the AWS CDK CLI are installed.

Creating a Sample CDK Project

The first step in your journey is initializing a new CDK project with the AWS Lambda and AWS API Gateway packages. For this, you can use the following commands:

```shell
$ mkdir test-api && cd test-api
$ cdk init app --language javascript
$ npm i \
    @aws-cdk/aws-apigatewayv2 \
    @aws-cdk/aws-apigatewayv2-integrations \
    @aws-cdk/aws-lambda
```

Implementing the Sample API

The infrastructure code for the API will be implemented inside the lib/test-api-stack.js file:

```javascript
const cdk = require("@aws-cdk/core");
const lambda = require("@aws-cdk/aws-lambda");
const apiGateway = require("@aws-cdk/aws-apigatewayv2");
const integrations = require("@aws-cdk/aws-apigatewayv2-integrations");

class TestApiStack extends cdk.Stack {
  constructor(scope, id, props) {
    super(scope, id, props);

    const httpApi = new apiGateway.HttpApi(this, "HttpApi");

    // /fast responds immediately.
    this.createLambdaRoute(
      httpApi,
      "FastFunction",
      "/fast",
      `exports.handler = async () => ({
        statusCode: 200,
        body: "FAST"
      });`
    );

    // /slow waits a random time between 0 and 3 seconds before responding.
    this.createLambdaRoute(
      httpApi,
      "SlowFunction",
      "/slow",
      `const sleep = (ms) => new Promise((r) => setTimeout(r, ms));
      exports.handler = async () => {
        await sleep(3000 * Math.random());
        return { statusCode: 200, body: "SLOW" };
      };`
    );

    // Exposes the API's base URL as a stack output, shown after `cdk deploy`.
    new cdk.CfnOutput(this, "apiUrl", {
      exportName: "api-url",
      value: httpApi.url,
    });
  }

  // Creates an inline Lambda function and routes all HTTP methods on `path` to it.
  createLambdaRoute(httpApi, name, path, code) {
    httpApi.addRoutes({
      path,
      methods: [apiGateway.HttpMethod.ANY],
      integration: new integrations.LambdaProxyIntegration({
        handler: new lambda.Function(this, name, {
          runtime: lambda.Runtime.NODEJS_12_X,
          handler: "index.handler",
          code: lambda.Code.fromInline(code),
        }),
      }),
    });
  }
}

module.exports = { TestApiStack };
```

This web API uses AWS API Gateway with two routes, each backed by an AWS Lambda function. The Lambda functions are tiny, so they are defined inline.

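The /slow endpoint waits a random time between 0 and 3 seconds before responding. Since the default AWS Lambda timeout is also 3 seconds, invocations that draw a delay close to the maximum can, together with the function's own overhead, run longer than the timeout and fail.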
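How often should you expect /slow to time out? Under the simplifying assumption that an invocation's run time is the random sleep plus a fixed overhead (`overheadMs` is a made-up parameter for illustration, not something Lambda reports), only sleeps within `overheadMs` of the 3-second limit fail, so the expected failure rate is roughly `overheadMs / 3000`. This sketch computes that and checks it with a quick simulation:

```javascript
// Hypothetical estimate of how often /slow exceeds the Lambda timeout.
// Assumption: run time = uniform random sleep in [0, maxSleepMs) + a fixed overheadMs.
function expectedTimeoutRate(overheadMs, maxSleepMs = 3000, timeoutMs = 3000) {
  // An invocation times out when sleep >= timeoutMs - overheadMs.
  const threshold = timeoutMs - overheadMs;
  if (threshold <= 0) return 1;
  if (threshold >= maxSleepMs) return 0;
  return (maxSleepMs - threshold) / maxSleepMs;
}

// Monte Carlo check that mirrors `sleep(3000 * Math.random())` in the handler.
function simulatedTimeoutRate(overheadMs, samples = 100000, maxSleepMs = 3000, timeoutMs = 3000) {
  let timeouts = 0;
  for (let i = 0; i < samples; i++) {
    const runTimeMs = maxSleepMs * Math.random() + overheadMs;
    if (runTimeMs >= timeoutMs) timeouts++;
  }
  return timeouts / samples;
}

console.log(expectedTimeoutRate(30)); // 0.01
console.log(simulatedTimeoutRate(30)); // close to 0.01, varies from run to run
```

So even a small overhead produces a steady trickle of failures on /slow, which is exactly what makes it useful as a load testing target.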

Finally, a stack output gives you the base URL of the web API, which you'll use for load testing later.

Deploying the Sample API

To deploy the sample API, use this command:

```shell
$ cdk deploy
```

This can take a few minutes. After the deployment has succeeded, you will see the sample API URL as the last output. The URL will look something like this:

`https://<API_ID>.execute-api.<AWS_REGION>.amazonaws.com/`


Four Tools for Load Testing

Since load testing isn’t specific to serverless applications, there are many solutions out there to use. Some of these solutions are open-source libraries, some are created by AWS, and some companies offer load testing as a service.

Serverless systems scale pretty well, so testing them with JMeter running on your local machine can only go so far. The solutions mentioned here often use serverless, or at least cloud-based, approaches to match the scaling features of the system they test.

When a tool asks you for the URL to test, use one of the API endpoints you created:

`https://<API_ID>.execute-api.<AWS_REGION>.amazonaws.com/fast`

`https://<API_ID>.execute-api.<AWS_REGION>.amazonaws.com/slow`
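Instead of copying the URL by hand, you can let the CDK CLI write the stack outputs to a file with `cdk deploy --outputs-file outputs.json`; the file maps stack names to their outputs. Here is a small sketch that builds both endpoint URLs from it. It assumes the default stack name `TestApiStack` that `cdk init` generates for a `test-api` directory and the `apiUrl` output defined in the stack; `endpointUrls` is a hypothetical helper.

```javascript
const fs = require("fs");

// Builds the two endpoint URLs from the parsed contents of a CDK outputs file.
// Assumptions: stack name "TestApiStack" and output ID "apiUrl".
function endpointUrls(outputs, stackName = "TestApiStack") {
  // HttpApi URLs end with a slash, so relative resolution appends the path.
  const base = outputs[stackName].apiUrl;
  return {
    fast: new URL("fast", base).toString(),
    slow: new URL("slow", base).toString(),
  };
}

// Usage after `cdk deploy --outputs-file outputs.json`:
if (fs.existsSync("outputs.json")) {
  const outputs = JSON.parse(fs.readFileSync("outputs.json", "utf8"));
  console.log(endpointUrls(outputs));
}
```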

1. BlazeMeter

BlazeMeter is a managed service offering from Broadcom and probably the quickest solution here to get started with. Directly after signing up, BlazeMeter greets you with a wizard that helps you create your first load test step by step.

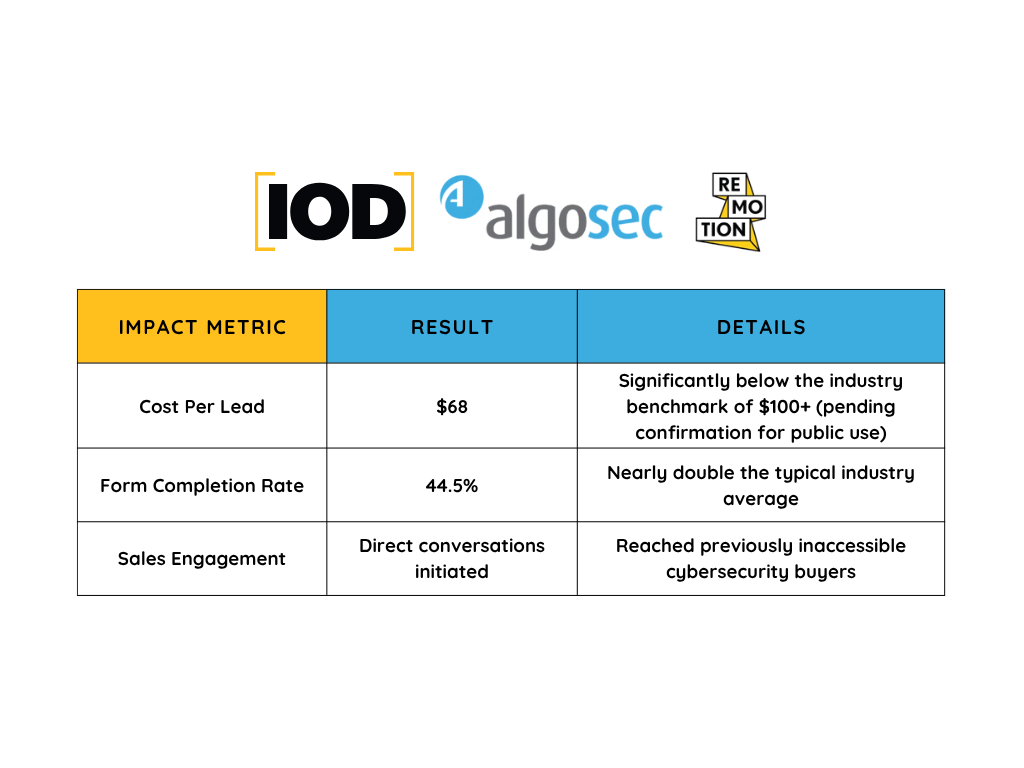

Figure 1 shows the results of such a simple load test for our /slow API endpoint:

By default, the load test runs with 20 parallel users, but you can configure this to your liking. With basically no setup, you get detailed insight into your API's performance behavior.

2. Distributed Load Testing on AWS

Distributed Load Testing on AWS is AWS's own load testing solution. Its CloudFormation template deploys infrastructure into your AWS account that comes with a web-based UI for setting up load tests.

The whole stack is a serverless application that starts containers on Amazon ECS with AWS Fargate to run the load tests, so you can scale up as needed. The containers use a Taurus Docker image, which wraps JMeter for you.

You can easily install this onto your AWS account with just a few clicks; an email will notify you when the deployment is ready.

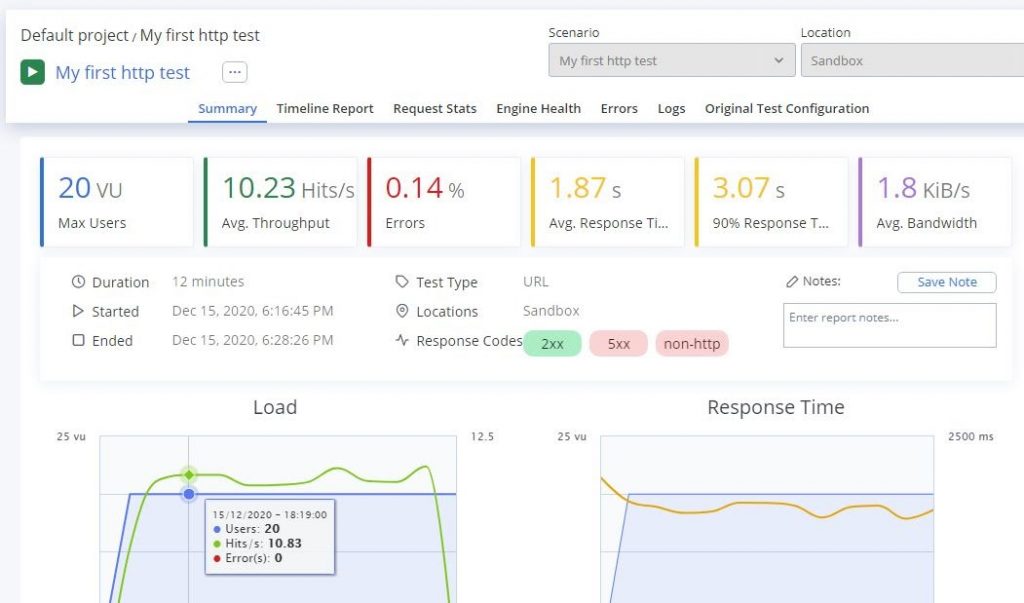

In Figure 2, you can see the results of a basic test scenario:

3. Wrk

Wrk is a more minimalistic approach to load testing. It’s a highly optimized CLI tool “capable of generating significant load when run on a single multi-core CPU,” as stated on GitHub.

To set Wrk up, you need a Linux or Unix system: clone the repository from GitHub and compile it yourself. I ran it on an AWS Cloud9 environment because it comes with many compilers and build tools preinstalled.

```shell
$ git clone https://github.com/wg/wrk.git
$ cd wrk
$ make
```

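Then, while still inside the cloned directory, run the following command. It starts 12 threads (`-t12`) that keep 400 HTTP connections open (`-c400`) for 30 seconds (`-d30s`). Don't forget to replace API_ID and AWS_REGION with the right values!

```shell
$ ./wrk -t12 -c400 -d30s \
    https://<API_ID>.execute-api.<AWS_REGION>.amazonaws.com/slow
```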

The test will take about 30 seconds and then output its results into the terminal. The following output is taken from a test run on my machine:

```
Running 30s test @ …
  12 threads and 400 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   990.87ms  569.91ms   2.00s    58.34%
    Req/Sec    22.88     13.11    80.00     78.93%
  7668 requests in 30.07s, 1.31MB read
  Socket errors: connect 0, read 0, write 0, timeout 2514
  Non-2xx or 3xx responses: 7
Requests/sec:    254.98
Transfer/sec:     44.58KB
```

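The results aren't as fancy as with the other services, but you see how many requests led to an error and how latency behaved over the course of the 7,668 requests. Most of the errors here are timeouts: wrk records a timeout when no response arrives within its default timeout of 2 seconds (adjustable with the `--timeout` option). That is also why the maximum latency tops out at 2.00s, even though the /slow function can take up to 3 seconds.

Wrk doesn't give you numbers as comprehensive as the other tools do, but it gives you a good overview of how your endpoints behave. It's also open-source and runs on your own machine, which can be nice if you need or want that.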
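If you run wrk repeatedly, for example before and after changing a function's timeout or memory, it helps to pull the headline numbers out of its summary. Here is a hypothetical helper, `parseWrkSummary`, that relies only on the line formats shown in the output above. wrk typically omits the socket error and non-2xx lines when there is nothing to report, so missing values default to 0.

```javascript
// Hypothetical helper: extracts headline numbers from wrk's text summary.
// Assumes the line formats of the sample output; missing lines yield 0.
function parseWrkSummary(text) {
  const num = (re) => {
    const m = text.match(re);
    return m ? Number(m[1]) : 0;
  };
  return {
    requests: num(/(\d+) requests in/),
    durationSeconds: num(/requests in ([\d.]+)s/),
    requestsPerSec: num(/Requests\/sec:\s+([\d.]+)/),
    timeouts: num(/timeout (\d+)/),
    non2xx3xx: num(/Non-2xx or 3xx responses: (\d+)/),
  };
}

// The output from the test run above.
const sample = `Running 30s test @ …
  12 threads and 400 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   990.87ms  569.91ms   2.00s    58.34%
    Req/Sec    22.88     13.11    80.00     78.93%
  7668 requests in 30.07s, 1.31MB read
  Socket errors: connect 0, read 0, write 0, timeout 2514
  Non-2xx or 3xx responses: 7
Requests/sec:    254.98
Transfer/sec:     44.58KB`;

console.log(parseWrkSummary(sample));
```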

4. k6

k6 is a hybrid solution for load testing. It's open-source and can run on your own machine, like Wrk, but it also has a cloud service that runs your test cases without any code changes.

I will run k6 on AWS Cloud9, a web-based IDE that runs on an EC2 instance with Amazon Linux 2, so I'll follow the related installation instructions (the docs cover other systems if you need them):

```shell
$ sudo yum install https://dl.k6.io/rpm/repo.rpm
$ sudo yum install k6
```

k6 tests are written as JavaScript files, so you need to create one for your sample API with the following content:

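```javascript
import http from "k6/http";
import { sleep } from "k6";

// Each virtual user runs this function in a loop.
export default function () {
  http.get("https://<API_ID>.execute-api.<AWS_REGION>.amazonaws.com/slow");
  sleep(1); // pause for one second between iterations
}
```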

Again, don’t forget to replace API_ID and AWS_REGION with the correct values. Save the file as test.js, and then run this command:

$ k6 run test.js

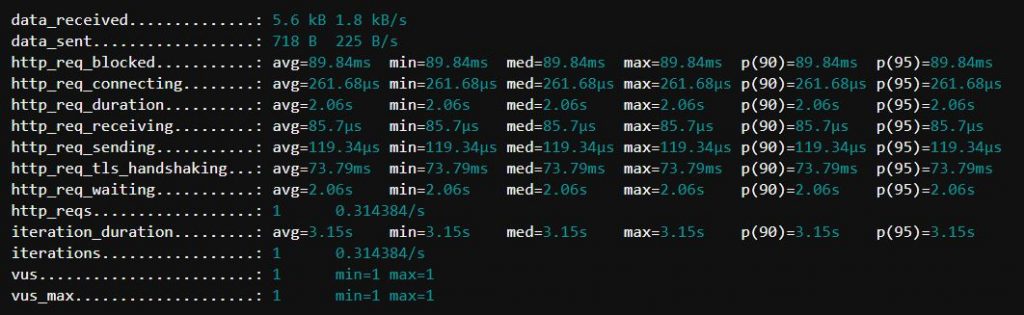

The results come in the command line, like with Wrk, but are a bit more detailed, so I included a screenshot of them to give you a feeling of what to expect:

Average, median, and even p90 and p95 come out of the box—very helpful! And as I said, the whole script can be run on the k6 cloud service without any changes.
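The test.js script above doesn't configure any load. Without an `options` export or CLI flags such as `--vus` and `--duration`, k6 runs a single virtual user for a single iteration. Here is a sketch of how you might extend the script with k6's `options` export: `stages` ramps the number of virtual users up and down, and `thresholds` fails the run when the error rate or the p95 latency crosses a limit. The stage targets and threshold values are example numbers, not recommendations. The 3,000 ms latency limit simply mirrors the 3-second Lambda timeout of the /slow function.

```javascript
import http from "k6/http";
import { check, sleep } from "k6";

export const options = {
  // Ramp virtual users up, hold a higher load, then ramp down (example values).
  stages: [
    { duration: "30s", target: 50 },
    { duration: "1m", target: 200 },
    { duration: "30s", target: 0 },
  ],
  // Fail the run if more than 1% of requests fail or p95 latency reaches 3 s.
  thresholds: {
    http_req_failed: ["rate<0.01"],
    http_req_duration: ["p(95)<3000"],
  },
};

export default function () {
  const res = http.get("https://<API_ID>.execute-api.<AWS_REGION>.amazonaws.com/slow");
  check(res, { "status is 200": (r) => r.status === 200 });
  sleep(1);
}
```

If a threshold is crossed, k6 marks it in the summary and exits with a non-zero code, which makes this kind of script handy in CI pipelines.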

Just sign up for k6, and you'll get a token to log in via the CLI tool:

```shell
$ k6 login cloud -t <YOUR_TOKEN>
```

Next, run the CLI tool with the cloud command:

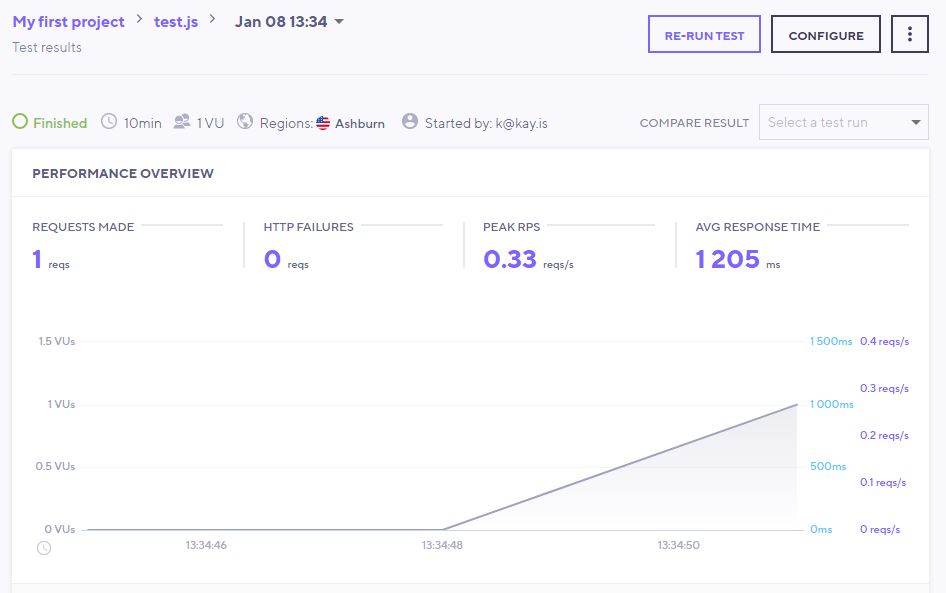

$ k6 cloud test.js

This time, the script will run on the servers of k6, and you can see the results on their website, like with BlazeMeter:

Summary

There are different approaches to load testing, and depending on your requirements, some tools will suit your organization better than others.

Do you want to stick to open-source software? Then Wrk and k6 are the way to go.

Do you want to run your own testing infrastructure in the cloud? Then Distributed Load Testing on AWS is a good solution.

If you don’t want to own or maintain a testing solution, k6 and BlazeMeter will do all the hard work for you.

With k6, you can also switch between local and cloud-based testing if your use cases require it.