By default, Kubernetes pods and services cannot be accessed from outside the cluster. At some point, however, you may want to turn your K8s applications into full-fledged web services accessible over the Internet. The Kubernetes Ingress resource is one of several ways to accomplish this.

Kubernetes Ingress allows you to configure various rules for routing traffic to services within your Kubernetes cluster. However, Ingress is not just that. You can use an advanced K8s Ingress solution to load-balance traffic; terminate SSL/TLS; implement name-based virtual hosting; and enable API authentication and security, monitoring, and more. Whether you need a simple reverse proxy that routes traffic to a specific service, or a more advanced setup with traffic middleware and complex traffic-splitting rules, depends on the requirements of your applications.

In this article, we’ll try to guide you through various K8s Ingress solutions and use cases to help you find the best K8s Ingress option that fits your needs.

K8s Ingress Basics

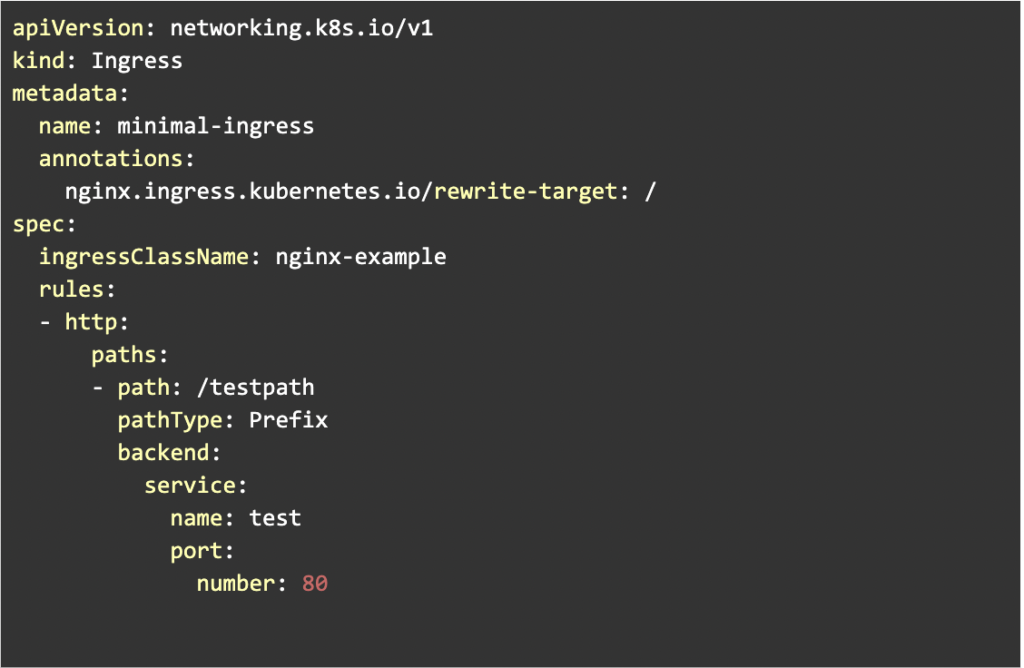

Before we dive into the discussion of various K8s Ingress solutions, let’s see how ingress is implemented in Kubernetes. K8s has a built-in resource that defines the configuration needed for an ingress controller and the services it can route to. In a nutshell, the K8s Ingress resource is just a K8s API object that defines hosts, URI paths, backing service names and ports, and other metadata.

However, on its own, an Ingress resource does nothing. For it to take effect, you have to deploy an Ingress controller and associate the K8s Ingress resource with it.
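
As a minimal sketch of what such a resource can look like, the manifest below routes requests for one host and path prefix to a backing service. The host `app.example.com`, the service name `web-service`, its port, and the class name `nginx` are placeholders chosen for illustration. The `ingressClassName` field is what ties the resource to a particular controller.

```yaml
# Illustrative only: all names below are hypothetical placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx          # selects the Ingress controller that handles this resource
  rules:
    - host: app.example.com        # name-based virtual host
      http:
        paths:
          - path: /api             # URI path to match
            pathType: Prefix
            backend:
              service:
                name: web-service  # backing Service
                port:
                  number: 80
```

Applying this manifest without a running controller for the `nginx` class creates the object but routes no traffic.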

K8s lets you create multiple Ingresses, which is useful when you want to use one Ingress for external traffic and another one for in-cluster traffic between services.
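
One way to keep such Ingresses apart is to give each controller its own `IngressClass` and reference it from the Ingress resources. The sketch below assumes two controllers are already deployed. The class names `external` and `internal` and the second controller identifier are hypothetical. `k8s.io/ingress-nginx` is the identifier used by the community NGINX controller.

```yaml
# Two classes, each claimed by a different controller deployment.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: external
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: internal
spec:
  controller: example.com/internal-ingress-controller   # hypothetical identifier
```

An Ingress then opts into one of them with `spec.ingressClassName: external` or `spec.ingressClassName: internal`.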

Key Things to Consider When Selecting K8s Ingress

The choice of the Kubernetes Ingress controller directly depends on the requirements of your application, the location of your K8s cluster (e.g., on-premises or in the cloud), the design of your microservices architecture, security compliance requirements, and more.

Below, we list several important things to consider when choosing the right K8s Ingress solution.

Protocol Requirements

Traditional APIs typically use standard Layer 7 protocols such as HTTP(s). If your K8s services are based on HTTP(s), you can probably opt for a simple reverse proxy solution. However, this may not be enough if your API relies on other protocols, such as HTTP/2 (a binary protocol), gRPC, or WebSockets.

Also, if your API needs to route low-level traffic, the K8s Ingress controller has to support low-level Layer 4 protocols such as TCP/UDP.
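
How Layer 4 routing is configured differs from controller to controller. As one illustration, the community ingress-nginx controller reads TCP mappings from a ConfigMap that is passed to it through the `--tcp-services-configmap` flag. Each key is an exposed port and each value has the form `namespace/service:port`. The service name, namespace, and ports below are placeholders.

```yaml
# Sketch for the community ingress-nginx controller; names and ports are hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  # exposed port 5432 -> Service "postgres" in namespace "default", port 5432
  "5432": "default/postgres:5432"
```

The exposed port also has to be opened on the controller's own Service, which is part of the extra effort Layer 4 traffic usually involves.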

Downtime Tolerance

Most statically configured reverse proxies and ingress controllers have to be reloaded whenever their configuration changes. The reload causes short downtime and increased memory consumption, and users may not be able to access your services while it is in progress.

If you need zero downtime for your API services, a K8s Ingress that supports automatic service discovery and dynamic reconfiguration may be the preferable option.

Middleware

Modern API services require various middleware for managing traffic. This may include rate limiting for controlling the traffic load on your service, circuit breakers for stopping failures from cascading between services, authentication middleware, etc. Advanced K8s Ingress controllers have this middleware configured at the API gateway level.

Because of this, developers do not need to code special traffic management rules into the application and can instead focus on building a product.
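
To make this concrete, here is a sketch of rate limiting declared on the Ingress itself rather than in application code. It assumes the community ingress-nginx controller, whose `nginx.ingress.kubernetes.io/limit-rps` annotation limits requests per second from a single client IP. Other controllers use different mechanisms. The host, service name, and limit value are placeholders.

```yaml
# Assumes the community ingress-nginx controller; names and values are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rate-limited-api
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "10"   # requests per second allowed per client IP
spec:
  ingressClassName: nginx
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 80
```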

Extensibility

If you don’t know all API and application requirements in advance, you can opt for a more flexible and extensible Ingress solution that lets you add custom plugins and modules for extra functionality. Unfortunately, not many K8s Ingress solutions are built with such extensibility in mind.

Regulatory Compliance

If your company works in a highly regulated industry, you may need an Ingress solution that complies with strict security standards like Federal Information Processing Standards (FIPS).

Also, for hardening your API security, you may opt for a K8s Ingress solution that supports a web application firewall (WAF) and includes built-in protection against common web vulnerabilities like the OWASP Top 10. Luckily, many K8s Ingress solutions do include security compliance as part of their paid subscription.

Overview of Ingress Solutions

Let’s review some popular Ingress solutions and see how they address the requirements listed above.

NGINX Ingress

The NGINX Ingress Controller is based on the NGINX reverse proxy and is one of the most popular ingress technologies for Kubernetes with over 50 million Docker pulls. The product ships in three versions: a community version maintained by the Kubernetes project (ingress-nginx), and two official versions from F5 NGINX, namely a free OSS version and a commercial NGINX Plus version.

Both the community version and the official OSS version are quite simple integrations of the NGINX reverse proxy with the Kubernetes Ingress resource. When using these free versions, you get basic HTTP(s) routing functionality and SSL features. On the protocol side, they provide support for TCP/UDP protocols and allow for gRPC integration and WebSockets. At this moment, integrating non-HTTP protocols requires additional configuration and engineering effort.
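
As an example of that additional configuration, the sketch below exposes a gRPC backend through the community ingress-nginx controller. It uses the `nginx.ingress.kubernetes.io/backend-protocol` annotation and terminates TLS with a certificate stored in a Secret. The host, Secret name, service name, and port are placeholders, and the official F5 NGINX controller is configured differently.

```yaml
# Assumes the community ingress-nginx controller; names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grpc-ingress
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: "GRPC"   # proxy to the backend using gRPC
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - grpc.example.com
      secretName: grpc-example-tls   # Secret of type kubernetes.io/tls holding the certificate
  rules:
    - host: grpc.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grpc-service
                port:
                  number: 50051
```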

A major limitation of the free NGINX Ingress is that it’s statically configured, so configuration updates lead to downtime. This is not optimal if your application requires zero downtime.

In sum, if you need a simple ingress solution that routes HTTP(s) traffic to K8s services, these two open-source versions may be enough. The official NGINX OSS version is more stable and backward-compatible than its community-developed counterpart since it does not rely on external third-party tools and Lua modules. It’s fully managed by the NGINX team and any tools and modules used in it undergo extensive interoperability testing.

Thus, the official NGINX OSS is preferable if you need a stable ingress product with 100% backward compatibility.

The paid NGINX Plus version is a more advanced ingress implementation with many useful features. In particular, it supports advanced traffic policies such as rate limiting, IP access control lists (ACLs), mTLS, and JWT validation. If your IT Ops practices call for staged rollouts, you can leverage NGINX Plus support for A/B testing and canary deployments. Also, the paid version has all the building blocks for security compliance, including WAF security middleware, JSON schema validation, and OWASP Top 10 protection.

It should be noted that NGINX Plus is one of the few K8s Ingress solutions that implement a WAF, which it does via NGINX App Protect. Thus, NGINX Plus covers pretty much all the ingress requirements mentioned above.

Kong Ingress Controller

The Kong Ingress Controller is built on Kong Gateway, which itself runs on NGINX (via OpenResty) and adds its own modules and plugins. At this moment, the Kong ecosystem has over 400 enterprise and community plugins for traffic management, API security, monitoring, and more. Many of these plugins come from third-party developers and are often easy to configure and use.

Kong also supports protocols beyond plain HTTP/1.1, including gRPC and HTTP/2, and offers request middleware, declarative configuration, and advanced load-balancing algorithms. In addition, there are Kong plugins that support OpenID Connect, Open Policy Agent, WAFs, and other security features. If there is no plugin that matches your exact needs, you can create a new one using a well-documented plugin development kit (PDK).
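
Plugins are typically attached declaratively. The sketch below defines a `KongPlugin` resource for Kong's `rate-limiting` plugin and attaches it to an Ingress through the `konghq.com/plugins` annotation. The resource names, path, service, and the limit of five requests per minute are placeholders chosen for illustration.

```yaml
# Sketch for the Kong Ingress Controller; names and limits are hypothetical.
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: rate-limit-5-per-minute
plugin: rate-limiting
config:
  minute: 5        # allow 5 requests per minute
  policy: local    # keep counters in memory on each Kong node
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: orders
  annotations:
    konghq.com/plugins: rate-limit-5-per-minute   # attach the plugin defined above
spec:
  ingressClassName: kong
  rules:
    - http:
        paths:
          - path: /orders
            pathType: Prefix
            backend:
              service:
                name: orders-service
                port:
                  number: 80
```

The `KongPlugin` and the Ingress that references it need to live in the same namespace.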

Not all Kong Ingress plugins are available for free though. Kong Ingress ships in two versions: the Kong Gateway OSS version and the Enterprise version. The OSS version offers basic API gateway features and open-source plugins. With Enterprise, you get access to a wide range of enterprise plugins, a dev portal (to generate an API and manage API versions), vitals (API analytics and monitoring), and RBAC. Also, Kong Enterprise provides a FIPS 140-2-compliant gateway, ideal for highly regulated industries.

In sum, the built-in extensibility of the Kong platform means that with the necessary effort, you can achieve feature parity with NGINX Plus and implement many new features not covered by competing ingress solutions.

Cloud Ingress

Cloud ingress relies on an external cloud-based load balancer to route traffic to the services behind your Ingress resources. On the Google Cloud Platform (GCP), for instance, an HTTP(s) load balancer is automatically created when you deploy an Ingress on GKE.

The major benefit of a cloud-based ingress controller is seamless integration with various cloud services offered by a cloud platform. If you’re running K8s in the cloud, this is a great advantage. For example, the GCE Ingress controller directly integrates with Google Cloud IAP, which lets you use Identity-Aware Proxy for the protection of K8s applications on GKE. Meanwhile, the AWS Load Balancer Controller (formerly the ALB Ingress Controller) creates an Application Load Balancer that integrates with AWS WAF, Cognito, and Route 53.
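
With cloud controllers, provider-specific behavior is usually set through annotations on the Ingress. As a sketch, the manifest below asks the AWS Load Balancer Controller for an internet-facing Application Load Balancer that sends traffic directly to pod IPs. It assumes the controller is installed and an `alb` IngressClass exists. The Ingress and service names are placeholders.

```yaml
# Assumes the AWS Load Balancer Controller is installed; names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: storefront
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing   # public ALB rather than internal
    alb.ingress.kubernetes.io/target-type: ip           # register pod IPs as targets
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: storefront-service
                port:
                  number: 80
```

Annotations like these are meaningful only to the provider's own controller, which is where the lock-in discussed in this section comes from.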

The main disadvantage of cloud-based ingress is that you have to use the specific cloud ingress controller offered by your cloud provider, which leads to vendor lock-in. Also, since cloud ingress requires a load balancer, you will incur additional costs. Load balancers are not free and can be quite expensive, especially if your K8s cluster has many applications and you have to install multiple ingresses.

Other potential problems to consider with cloud ingress include the following:

- Additional operational complexity. Load balancers require deployment and configuration of various resources such as IP addresses, DNS records, and TLS certificates, which may be hard to manage in the highly dynamic environment of K8s.

- Performance. Most load balancers are not built for performance, which may be a problem if you need to have multiple ingresses.

- Cloud quota. Cloud projects have a quota for backend services. For example, by default GCE projects grant a quota of three backend services. This may be insufficient for most Kubernetes clusters.

- Load balancing. Not all ingress controllers support fine-grained control over load-balancing algorithms.

- Cluster size. Most cloud-based ingresses do not support large K8s clusters (1,000+ nodes).

Conclusion

As this article demonstrates, there are many factors to consider when choosing the right ingress for your clusters. If you’re planning to run a K8s cluster in the cloud, you have pretty much no other choice but to use the cloud-provided Ingress controllers and load balancers. When deciding whether to run your K8s clusters in the cloud or on-premises, you should definitely consider the performance, quota, and cluster-size limitations of cloud ingress. For most applications that run in the cloud, including those on medium-size K8s clusters, cloud ingress offers many benefits, including direct integration with other cloud services.

If your API does not have strict compliance requirements and can use simple traffic policies, you can opt for the official OSS or community NGINX Ingress. Of the two, the official NGINX OSS version provides better stability and security because it’s directly managed by the NGINX team.

The Kong API gateway is an excellent choice if your API services need to be highly flexible and you want to have additional plugins. However, some plugins may only be available in the paid Kong version. Also, having multiple plugins may introduce compatibility issues and require more configuration efforts on the part of your engineering team.

Finally, NGINX Plus may be a preferred solution if your K8s cluster requires strict regulatory compliance and API security. Also, the paid NGINX version meets all other K8s Ingress criteria discussed in this article.