The popularity of Kubernetes, or K8s, has grown rapidly in recent years, and it has quickly become the de facto platform for organizations to run and orchestrate their container workloads. An open-source project born at Google and now hosted by the Cloud Native Computing Foundation, Kubernetes has changed the way engineering teams design, develop, and operate highly scalable container-based systems.

Despite Kubernetes’ extensive list of benefits, such as portability, flexibility, multi-cloud capabilities, and a great developer experience, operating and managing Kubernetes clusters on your own is no easy feat. Luckily, the major public cloud providers offer managed Kubernetes services: Amazon Elastic Kubernetes Service (EKS), Microsoft Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE). These can significantly lower the operational overhead associated with managing a K8s infrastructure.

Looking at Managed Kubernetes Services from Different Angles

At first glance, managed Kubernetes service offerings all seem quite similar regardless of the cloud provider you choose. While this initial assessment is not entirely wrong, it’s important to get to know the ecosystem provided by each option and examine all three orchestrators from diverse angles.

The key selling point of a managed Kubernetes service is easy operational management. Amazon, Microsoft, and Google all let engineers keep full control over the nodes and containers within the cluster while delivering a fully managed control plane. Engineers cannot fully control that control plane, but it remains compatible with the majority of popular K8s tools (e.g., kubectl, Helm).

But there are several other considerations, which I’ll cover below.

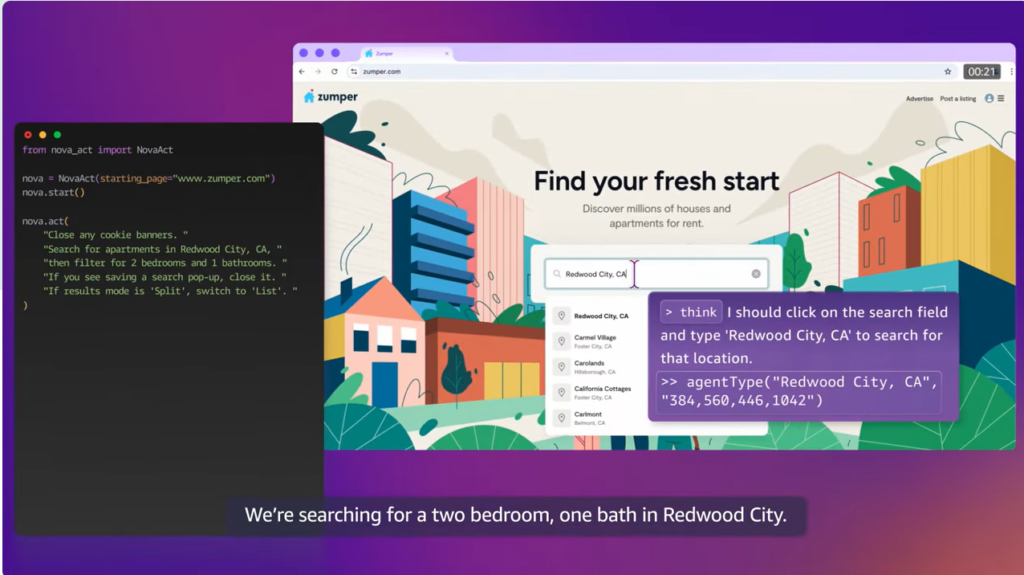

Developer Experience & Automation

An often overlooked selling point is the automation and built-in integrations available with other services within a cloud provider’s ecosystem. The developer experience is greatly improved when, in addition to Kubernetes-specific tools like Helm or Kubespray, engineers can also use provider-managed services, such as centralized logging and metrics via Amazon CloudWatch, Azure Application Insights, and Google Cloud’s operations suite (formerly Stackdriver).

When it comes to continuous integration and continuous deployment (CI/CD), developers are free to use any tool they wish, but the provider’s built-in service is often the smoothest path forward if starting from the ground up.

The services of choice for CI/CD from each provider are AWS CodeStar, Google Cloud Build, and Azure DevOps. While all three are quite good and provide the core functionality you’d expect, Azure DevOps stands out with a truly holistic development experience that includes tailored Kubernetes features. It’s also worth keeping in mind that Microsoft owns GitHub; while you can of course use GitHub with non-Azure environments, the acquisition shows the commitment Microsoft is making toward CI/CD and the developer experience.

A key part of the configuration and deployment of Kubernetes clusters is setting up infrastructure-as-code templates. Tools like Kubespray, which uses Ansible for provisioning and configuration, or Terraform, which describes infrastructure deployments across multiple cloud providers, can be used in a provider-agnostic way with any of the managed K8s options. However, if you’re already using a tool specific to one cloud provider, such as AWS CloudFormation, Azure Resource Manager (ARM), or Google Cloud Deployment Manager, you can continue using it with that provider’s K8s service. CloudFormation and ARM in particular are popular choices with strong communities behind them.

Virtual and Serverless Computing Options

AWS, Azure, and Google all offer managed Kubernetes services with a very familiar computing option for their worker nodes: virtual machine instances. This default, classic option gives you full control over how the nodes are installed and configured while keeping the managed benefits, thanks to the built-in control plane and native capabilities such as autoscaling and load balancing.

However, computing options for managed Kubernetes services go well beyond traditional virtual instances. Until recently, the words “Kubernetes” and “serverless” wouldn’t be found in the same sentence. But this is quite a misconception when it comes to managed K8s orchestrators. The barrier between the two worlds has been shattered by projects such as virtual-kubelet and Knative.

With AWS EKS, customers can run workload pods using Fargate, a serverless container runtime environment. AKS offers the same capability using the Azure Container Instances service. With GKE, the same type of feature exists via Knative, with one significant difference: when Google launched the Knative project, they decided to open it up to other industry partners to collaborate, including IBM, Red Hat, VMware, and Pivotal. While the project is still fairly young, it’s gathering attention from big tech companies, so it will be very interesting to see how it evolves.
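As a rough illustration of how a pod can be steered onto a serverless backend, the sketch below builds a pod manifest in Python and prints it as JSON, which `kubectl apply -f` accepts. The `pod_manifest` helper, the pod name, and the image are hypothetical. The node selector and toleration shown follow the convention used by virtual-kubelet-based nodes (such as AKS virtual nodes); treat the exact label and taint keys as assumptions to verify against your own cluster. On EKS, pods are instead matched to Fargate through a Fargate profile's namespace and label selectors, so no node selector is needed there.

```python
import json


def pod_manifest(name, image, node_selector=None, tolerations=None):
    """Build a minimal Pod manifest as a plain dict (hypothetical helper)."""
    spec = {"containers": [{"name": name, "image": image}]}
    if node_selector:
        spec["nodeSelector"] = node_selector
    if tolerations:
        spec["tolerations"] = tolerations
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    # Assumption: the serverless node is labeled and tainted the way
    # virtual-kubelet nodes usually are; check your cluster before relying on it.
    pod = pod_manifest(
        "hello",
        "example.com/hello:1.0",  # hypothetical image
        node_selector={"type": "virtual-kubelet"},
        tolerations=[{"key": "virtual-kubelet.io/provider", "operator": "Exists"}],
    )
    print(json.dumps(pod, indent=2))
```

Without the selector and toleration, the same manifest would simply be scheduled onto the regular virtual machine nodes.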

Storage & Data Persistency

While serverless pods give a new edge to rapid growth and scalability without the inherent operational hassle, this new way of running containers in Kubernetes is usually only recommended for stateless workloads, i.e., applications that process information but don’t need to store it locally. Services like Fargate and Azure Container Instances (ACI) come with a lot of limitations when it comes to storage and data, since you can’t provision a local persistent volume.

A common approach to overcome those challenges is to use a network file storage service such as Google Filestore, AWS EFS, or Azure Files; these enable containers to access a filesystem volume over the network. Unlike local disk volumes, this approach also lets multiple running container applications access the same filesystem. However, this shared access can create additional challenges around concurrency and complexity. In terms of throughput and speed, local persistent disks have a clear advantage, but over the last few years, third-party partner services like NetApp have emerged. These can provide greater performance than the built-in network storage services and offer additional features such as easier management and data portability across hybrid environments.

Pods that run on virtual instances are the typical approach for stateful workloads. In addition to the network file storage option, the built-in block storage services in each cloud provider (Azure Disk Storage, AWS EBS, and Google Cloud Persistent Disk) are also a natural option when using virtual instances. The managed Kubernetes control plane integrates natively with the provider’s own storage service, making it easy for engineers to create and destroy persistent volumes using their familiar Kubernetes tools.
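The difference between block and network file storage shows up in a persistent volume claim mainly through its access mode. The sketch below builds two claims in Python and prints them as JSON, which `kubectl apply -f` accepts. The `pvc_manifest` helper and the storage class names `block-ssd` and `shared-files` are hypothetical; the real class names depend on the provider and on how the cluster is configured.

```python
import json


def pvc_manifest(name, size_gi, access_mode, storage_class):
    """Build a PersistentVolumeClaim manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    # Block storage: the volume is mounted read-write by a single node.
    block_claim = pvc_manifest("db-data", 100, "ReadWriteOnce", "block-ssd")
    # Network file storage: many nodes can mount the same volume read-write.
    shared_claim = pvc_manifest("shared-assets", 50, "ReadWriteMany", "shared-files")
    for claim in (block_claim, shared_claim):
        print(json.dumps(claim, indent=2))
```

`ReadWriteOnce` fits a single stateful pod such as a database, while `ReadWriteMany` is what lets several pods share one filesystem, with the concurrency caveats mentioned earlier.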

The Big 3 cloud providers’ services for block and network storage are fairly similar in terms of capabilities. If storage and data management is an area where you have demanding requirements, you should definitely take a look at solutions offered by the cloud providers’ partners.

Hybrid Environments & Network Connectivity

Amazon, Microsoft, and Google have been in a race to dominate hybrid and multi-cloud environments and to acquire customers that have traditionally been averse to the cloud due to massive on-premises investments or that have already opted for a competitor’s product.

Kubernetes, due to its open and multi-environment characteristics, has been key to unlocking multi-cloud and hybrid offerings. Google and Microsoft have been investing heavily on this front, launching the Google Anthos and Azure Arc products that enable organizations to deploy managed Kubernetes clusters outside the provider’s infrastructure into any environment they choose, including competitors’ clouds. This lets you simplify resource governance and develop software in a streamlined way.

Meanwhile, AWS, typically averse to hybrid or multi-cloud scenarios, launched Outposts. While this also enables the deployment of managed Kubernetes clusters outside of AWS’ infrastructure, Outposts comes with its own dedicated hardware and does not allow customers to use their existing hardware capacity or competitor clouds. At re:Invent 2020, AWS announced EKS Anywhere (coming in 2021) to enable EKS deployments in non-AWS environments.

Costs

When it comes down to pricing, there are some nuances to take into account. With AWS EKS, you pay a fee of $0.10 per hour per cluster, while compute and storage are billed at the usual rate of the chosen service (Fargate, EC2, EBS, EFS). With Microsoft AKS, there is no cluster management fee, and compute and storage are likewise charged separately according to capacity. However, the optional Uptime SLA costs $0.10 per hour per cluster. Google GKE used to offer free cluster management but now charges $0.10 per hour per cluster, while allowing one free zonal cluster per account. Compute and storage costs follow the same pricing as the underlying services (Persistent Disk, Compute Engine, among others).
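To put the hourly fees in perspective, here is a small sketch that turns them into a monthly control-plane bill. It uses only the rates quoted above and assumes an average month of 730 hours; the `monthly_management_fee` helper is hypothetical, and compute and storage are deliberately left out because they are billed separately.

```python
HOURS_PER_MONTH = 730  # assumption: average month (8,760 hours / 12)

# Hourly control-plane fees in USD, as quoted in the text above.
CLUSTER_FEE_PER_HOUR = {
    "EKS": 0.10,
    "AKS": 0.00,
    "AKS + Uptime SLA": 0.10,
    "GKE": 0.10,
}


def monthly_management_fee(service, clusters, free_clusters=0):
    """Monthly control-plane fee in USD, excluding compute and storage."""
    billable = max(clusters - free_clusters, 0)
    return round(CLUSTER_FEE_PER_HOUR[service] * HOURS_PER_MONTH * billable, 2)


if __name__ == "__main__":
    for service in CLUSTER_FEE_PER_HOUR:
        # Assumption: on GKE, one of the three clusters is the free zonal one.
        free = 1 if service == "GKE" else 0
        fee = monthly_management_fee(service, clusters=3, free_clusters=free)
        print(f"{service}: ${fee:.2f} per month for 3 clusters")
```

Under these assumptions, a single paid cluster comes to roughly $73 per month, so the management fee only becomes noticeable when you run many small clusters.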

One often overlooked aspect is that all Big 3 cloud providers allow their customers to get additional savings by committing to yearly reserved capacity plans. This can bring down costs significantly for your K8s orchestrator when you have predictable workloads. It can’t be emphasized enough that you need to understand the levers and tools at your disposal when it comes to managing cloud costs.
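Why predictability matters can be shown with a little arithmetic: a commitment is paid for every hour of the term whether or not the capacity is used, so it only wins once utilization exceeds one minus the discount. The sketch below assumes a hypothetical 25% discount and a $1.00 hourly rate purely for illustration; actual discounts vary by provider, term, and instance type.

```python
import math

HOURS_PER_YEAR = 8760


def on_demand_cost(hourly_rate, hours_used):
    """Pay only for the hours actually used."""
    return hourly_rate * hours_used


def committed_cost(hourly_rate, discount, hours_in_term=HOURS_PER_YEAR):
    """Pay the discounted rate for every hour of the term, used or not."""
    return hourly_rate * (1 - discount) * hours_in_term


def break_even_utilization(discount):
    """Fraction of the term a node must run for the commitment to pay off."""
    return 1 - discount


if __name__ == "__main__":
    rate, discount = 1.00, 0.25  # hypothetical values, not real provider pricing
    threshold = break_even_utilization(discount)
    print(f"Break-even utilization: {threshold:.0%}")
    for utilization in (0.5, threshold, 1.0):
        used = HOURS_PER_YEAR * utilization
        print(
            f"{utilization:.0%} used: on-demand ${on_demand_cost(rate, used):,.2f}"
            f" vs committed ${committed_cost(rate, discount):,.2f}"
        )
    # At the break-even point both options cost the same.
    assert math.isclose(
        on_demand_cost(rate, HOURS_PER_YEAR * threshold),
        committed_cost(rate, discount),
    )
```

A node that runs around the clock benefits from the full discount, while a bursty workload that runs half the time would be cheaper on demand under these assumed numbers.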

Looking Ahead of the Curve

If the last few years are any indication, the upcoming months will be full of surprises for the cloud and Kubernetes. You can expect more convergence between the functions-as-a-service and container worlds, with hybrid and serverless capabilities taking a prominent role. Multi-cloud and hybrid scenarios will be highlighted, and more features should appear to make technology governance easier. New types of Kubernetes users and business cases such as MLOps, along with technologies such as Red Hat OpenShift in the public cloud, will help to shape the months ahead. And let’s not forget that with AWS re:Invent on now, new announcements keep coming: expect many interesting new features and services related to containers and Kubernetes.

If you choose to use Kubernetes, you should look into the managed service offerings of all three cloud providers. The simplified management and reduced operational burden each one offers should allow you to focus on what’s most important.