If you work in DevOps or on a software development team, you’ve probably heard the expression “You build it, you run it,” coined by Werner Vogels, the CTO of Amazon, in 2006. This attitude is central to the DevOps approach.

Modern applications serve millions of requests from users in different geographical regions around the world, so teams need an efficient way to distribute workloads across servers to maximize speed and capacity utilization.

In this post, you’ll learn how load balancing works in the cloud, specifically on Google Cloud Platform, and which load balancers are available for your use.

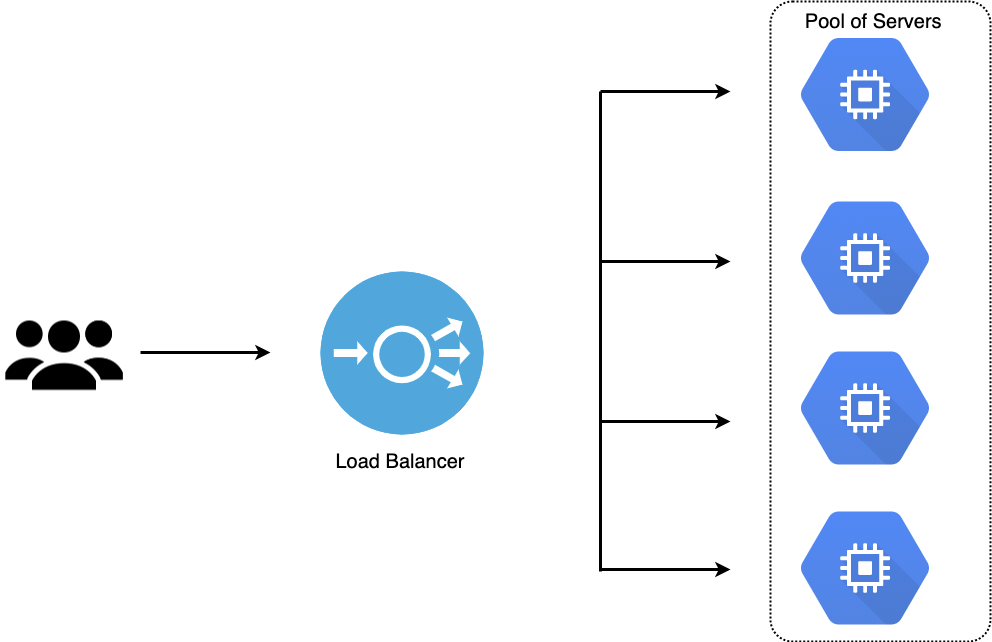

Figure 1: Simple example of load balancing

One of the main goals of load balancing in the cloud is to prevent servers from breaking down or getting overloaded.

Load balancers can be either hardware-based or software-based. Hardware-based load balancers are dedicated physical systems (boxes) whose main purpose is to balance the flow of traffic between servers. Software-based load balancers, by contrast, run on standard operating systems and commodity hardware.

While hardware-based load balancers are proprietary appliances that require rack-and-stack installation, software-based load balancers are installed on virtual machines and standard x86 servers.

Hardware-based load balancers typically require you to provision enough units up front to meet peak network traffic. As a result, some of them sit idle until traffic increases, for example, on Black Friday.

Why Load Balancing

There is a limit to the number of requests a single computer can handle at a given time. When faced with a sudden surge in requests, your application will load slowly, requests will time out, and your server will struggle to keep up. You have two options: scale up or scale out.

When you scale up (vertical scaling), you increase the capacity of a single existing machine by adding more storage (disk), memory (RAM), or processing power (CPU) as demand grows. But scaling up has a limit: eventually you reach a point where you cannot add more RAM or CPUs.

A better strategy is to scale out (horizontal scaling), which distributes the load across as many servers as necessary to handle the workload. In this case, you can keep scaling by adding more machines to an existing pool of resources.
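To make the scale-out idea concrete, here is a small capacity-planning sketch. The request rates and the 30% headroom factor are hypothetical numbers chosen for illustration; in practice you would measure per-server throughput with load tests.

```python
import math

def servers_needed(peak_rps: float, per_server_rps: float, headroom: float = 0.3) -> int:
    """Estimate how many identical servers a scaled-out pool needs.

    peak_rps: expected peak requests per second (hypothetical input).
    per_server_rps: sustained requests per second one server can handle.
    headroom: fraction of each server's capacity kept free for spikes and failures.
    """
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_rps = per_server_rps * (1 - headroom)  # capacity we actually plan to use
    return max(1, math.ceil(peak_rps / usable_rps))

# A normal day versus a Black Friday spike (illustrative figures only).
print(servers_needed(peak_rps=2_000, per_server_rps=500))   # 6
print(servers_needed(peak_rps=20_000, per_server_rps=500))  # 58
```

A tenfold spike roughly needs ten times the servers, which a scaled-out pool can absorb by adding machines, whereas a single scaled-up machine eventually hits its hardware ceiling.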

The Benefits

The benefits of load balancing include the following:

Prevents Network Server Overload

When using load balancers in the cloud, you can distribute your workload among several servers, network units, data centers, and cloud providers. This lets you effectively prevent network server overload during traffic surges.

High Availability

High availability means that your entire system doesn’t go down when a single component fails. Load balancers help by redirecting requests to healthy nodes when one fails.

Better Resource Utilization

Load balancing is centered around the principle of efficiently distributing workloads across data centers and through multiple resources, such as disks, servers, clusters, or computers. It maximizes throughput, optimizes the use of available resources, avoids overload of any single resource, and minimizes response time.
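One common way to avoid overloading any single resource is the least-connections strategy: send each new request to the backend currently handling the fewest active connections. The sketch below is a generic illustration of that technique, not GCP’s internal algorithm, and the backend names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Backend:
    name: str
    active_connections: int = 0

class LeastConnectionsBalancer:
    """Route each new request to the backend with the fewest active connections."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)

    def acquire(self) -> Backend:
        # min() returns the first backend with the lowest count, so ties follow list order.
        backend = min(self.backends, key=lambda b: b.active_connections)
        backend.active_connections += 1
        return backend

    def release(self, backend: Backend) -> None:
        # Call when a request finishes so the count reflects current load.
        backend.active_connections = max(0, backend.active_connections - 1)

lb = LeastConnectionsBalancer([Backend("vm-a"), Backend("vm-b"), Backend("vm-c")])
first = lb.acquire()      # vm-a (all idle, tie broken by order)
second = lb.acquire()     # vm-b
lb.release(first)         # vm-a is idle again
print(lb.acquire().name)  # vm-a
```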

Prevents a Single Point of Failure

Load balancers are able to detect unhealthy nodes in your cluster through various algorithmic and health-checking techniques. In the event of failure, loads can be transferred to a different node without affecting your users, affording you the time to address the problem rather than treating it as an emergency.
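Health checking usually works by probing each node periodically and changing its state only after several consecutive results agree, so a single dropped probe doesn’t cause flapping. The sketch below models that idea with hypothetical thresholds (two consecutive failures mark a node unhealthy, two consecutive successes mark it healthy again). GCP health checks expose similar healthy and unhealthy threshold settings, but this is a simplified model, not their implementation.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    healthy: bool = True
    consecutive_ok: int = 0
    consecutive_fail: int = 0

class HealthChecker:
    def __init__(self, nodes, healthy_threshold=2, unhealthy_threshold=2):
        self.nodes = {n.name: n for n in nodes}  # dict keeps insertion order
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold

    def record(self, name: str, probe_ok: bool) -> None:
        """Record one probe result; flip state only after enough consecutive results."""
        node = self.nodes[name]
        if probe_ok:
            node.consecutive_ok += 1
            node.consecutive_fail = 0
            if not node.healthy and node.consecutive_ok >= self.healthy_threshold:
                node.healthy = True
        else:
            node.consecutive_fail += 1
            node.consecutive_ok = 0
            if node.healthy and node.consecutive_fail >= self.unhealthy_threshold:
                node.healthy = False

    def healthy_nodes(self):
        # Only these nodes should receive new traffic.
        return [n.name for n in self.nodes.values() if n.healthy]

checker = HealthChecker([Node("vm-a"), Node("vm-b")])
checker.record("vm-b", probe_ok=False)
print(checker.healthy_nodes())  # ['vm-a', 'vm-b'] (one failure is not enough)
checker.record("vm-b", probe_ok=False)
print(checker.healthy_nodes())  # ['vm-a'] (traffic now fails over to vm-a)
```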

Load Balancing on Google Cloud Platform (GCP)

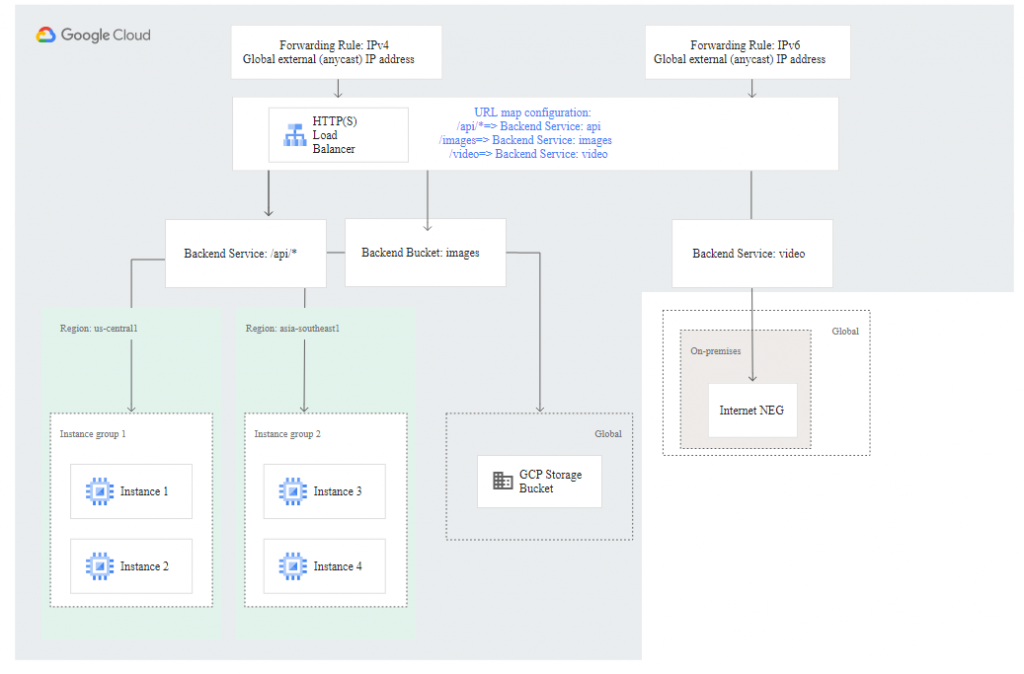

Figure 2: GCP Load Balancing, Source: Google Cloud Platform


A common question from organizations that choose Google Cloud Platform as their cloud provider is: what load balancing offerings does GCP provide to distribute their workloads effectively? In this section, I’ll answer this question with a deep dive into load balancing on GCP.

Many high-traffic websites today serve hundreds (and even millions) of simultaneous requests from clients/users and return the correct application data, video, images, or text, all in a reliable and fast manner.

Connection timeout errors and long wait times usually occur when an application backend can’t scale as network traffic increases.

Adding more backend servers might seem like the obvious fix, but then efficient resource usage becomes the next issue to deal with. You need to answer questions such as how to distribute traffic to backend servers based on their capacity, and how to stop sending traffic to an unhealthy backend server.
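A common answer to the first question is weighted distribution, where each backend receives traffic in proportion to its capacity. The sketch below implements smooth weighted round robin (the variant used by NGINX) and skips backends marked unhealthy, which also touches on the second question. The weights are hypothetical capacity scores; GCP’s own balancing modes work differently under the hood, so treat this only as an illustration of the general technique.

```python
class WeightedBackend:
    def __init__(self, name: str, weight: int):
        self.name = name
        self.weight = weight  # relative capacity (hypothetical units)
        self.current = 0      # running score used by the algorithm
        self.healthy = True

def pick(backends):
    """Smooth weighted round robin over healthy backends."""
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy backends")
    total = sum(b.weight for b in candidates)
    for b in candidates:
        b.current += b.weight
    chosen = max(candidates, key=lambda b: b.current)  # ties go to list order
    chosen.current -= total
    return chosen

pool = [WeightedBackend("big", 5), WeightedBackend("small-1", 1), WeightedBackend("small-2", 1)]
print([pick(pool).name for _ in range(7)])
# ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

Over every seven picks, the backend with weight 5 gets five requests, but they are interleaved with the smaller backends rather than sent in one burst.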

This is where GCP load balancing comes into the picture.

Load balancers on GCP are managed services that distribute traffic across multiple instances of your application. Google takes on the operational overhead, which reduces the risk of having a non-functional, slow, or overburdened application.

Let’s look at the different types of load balancing on GCP.

Global Load Balancing (GLB)

Global load balancing (GLB) is the practice of distributing traffic across connected server resources located in different geographical regions, so that your workload is spread globally instead of sitting in a single data center. Users of modern applications are geographically dispersed, and the farther your app is from your users, the longer it takes to transmit data back and forth. A worldwide pool of servers behind a global load balancer ensures that your users can connect to servers that are geographically close to them.
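The sketch below illustrates the routing idea behind a global load balancer: send each user to the closest healthy region that still has spare capacity, and overflow to the next-closest region when it is full. The latencies and capacities are made-up inputs for illustration; a real global load balancer measures proximity and capacity itself.

```python
from dataclasses import dataclass

@dataclass
class Region:
    name: str
    capacity: int       # max concurrent requests this region should take (hypothetical)
    in_flight: int = 0
    healthy: bool = True

def route(regions, latency_ms):
    """Return the closest healthy region with spare capacity, or None if all are full.

    latency_ms maps region name -> round-trip time from this user (assumed to be measured).
    """
    for region in sorted(regions, key=lambda r: latency_ms[r.name]):
        if region.healthy and region.in_flight < region.capacity:
            region.in_flight += 1
            return region.name
    return None

regions = [Region("us-east1", capacity=2), Region("europe-west1", capacity=2)]
user_in_paris = {"us-east1": 90, "europe-west1": 12}
print([route(regions, user_in_paris) for _ in range(5)])
# ['europe-west1', 'europe-west1', 'us-east1', 'us-east1', None]
```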

Global load balancers offer enhanced traffic management and disaster recovery benefits that optimize application performance. You can think of GLB services as systems that balance the load between scale units, endpoints, and application stamps hosted across several geographies or regions. Unlike the load balancers on Azure and AWS, which are typically regional, GCP global load balancers can manage resources deployed across several regions without requiring network peering or a complex VPN configuration.

Note: When you use GCP’s global load balancers, make sure you use the Premium Tier of Network Service Tiers.

Regional Load Balancing

Regional load balancing allows you to split your workload across a pool of servers located in the same region. Some businesses serve customers in specific regions, perhaps a market niche in a particular country, but their workload may be more than a single server can handle. Such businesses may run a fleet of servers in a region close to their customers to cope with the workload.

This is where regional load balancers come into play. You can think of them as load balancers that balance the load between clusters, containers, or VMs within the same region.

External Load Balancing (ELB)

External load balancing supports instance groups, zonal network endpoint groups (NEGs), Cloud Storage buckets, serverless NEGs, and internet NEGs. You can use ELB to support web, application, and database tier services.

External load balancing also supports content-based load balancing and cross-region load balancing, which you can configure in the Premium Tier. In both cases, you set up separate backend services, each with NEGs or instance groups as backends, in multiple regions. When you use the Premium Tier, the external load balancer also advertises the same global external IP address from multiple points of presence and routes traffic to the nearest Google Front End.
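Content-based load balancing is driven by a URL map: host rules select a set of path rules, and path rules select a backend service. Here is a simplified Python model of that lookup, assuming longest-prefix matching on paths and a default service as the fallback. The hostnames and backend-service names are hypothetical, and this illustrates the concept rather than the exact matching semantics of GCP URL maps.

```python
# Hypothetical URL map: host -> list of (path prefix, backend service).
URL_MAP = {
    "example.com": [
        ("/static/", "bucket-backend"),
        ("/api/v2/", "api-v2-service"),
        ("/api/", "api-service"),
    ],
}
DEFAULT_SERVICE = "web-service"  # used when no host or path rule matches

def resolve(host: str, path: str) -> str:
    """Pick a backend service for a request using the longest matching path prefix."""
    rules = URL_MAP.get(host, [])
    matches = [(prefix, svc) for prefix, svc in rules if path.startswith(prefix)]
    if not matches:
        return DEFAULT_SERVICE
    # The most specific (longest) prefix wins, regardless of rule order.
    return max(matches, key=lambda m: len(m[0]))[1]

print(resolve("example.com", "/api/v2/users"))  # api-v2-service
print(resolve("example.com", "/api/orders"))    # api-service
print(resolve("example.com", "/index.html"))    # web-service
print(resolve("other.org", "/api/orders"))      # web-service
```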

Internal Load Balancing (ILB)

With internal load balancing, you can run your applications behind an internal IP address and distribute HTTP/HTTPS traffic to backend applications hosted on either Google Kubernetes Engine (GKE) or Google Compute Engine (GCE).

The internal load balancer is a managed service that is accessible only on an internal IP address, in the chosen region of your Virtual Private Cloud (VPC) network. You can use it to route and balance traffic to your virtual machines. At a high level, an internal load balancer consists of one or more backend services to which it forwards traffic, and an internal IP address to which clients send traffic.

Internal load balancers use a URL map and health checks to deliver the load balancing service. They serve as useful tools for improving legacy applications and support web applications and database tier services.

TCP/SSL Load Balancing

TCP/SSL load balancing allows you to distribute TCP traffic across a pool of VM instances within a Compute Engine region. You can use TCP/SSL load balancers to terminate user SSL (TLS) connections at the load balancing layer; the load balancer then distributes the connections across your backend instances using the TCP or SSL protocol.

You can deploy TCP/SSL load balancers globally and use them to handle non-HTTP(S) traffic, such as TCP traffic on specific, well-known ports. When you have services in multiple regions, a TCP/SSL load balancer automatically detects the nearest location with capacity and directs your traffic there. When you use TCP/SSL load balancers on GCP, make sure you use the Premium Tier of Network Service Tiers; otherwise, load balancing becomes a regional service, and all of your backends must be located in the region used by the load balancer’s forwarding rule and external IP address.

TCP Proxy Load Balancing

TCP Proxy Load Balancing (TPLB) is a global load balancing service suitable for uninterrupted non-HTTP traffic. It’s implemented on Google Front Ends (GFEs), which are distributed globally, and because it carries TCP, it delivers a reliable, error-checked stream of packets that is not easily corrupted or lost.

You can use the TCP proxy load balancer to terminate your clients’ TCP sessions and forward traffic to your VM instances using SSL or TCP. These instances can be in various regions, and the load balancer directs traffic to the nearest region that has available capacity. A TCP proxy load balancer can have only a single backend service, but that backend service can have backends in multiple regions. The components of TCP proxy load balancers are forwarding rules (with their addresses) and target proxies.
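To show what terminating the client’s TCP session means in practice, here is a minimal asyncio sketch of a TCP proxy: it accepts the client connection itself, opens a separate connection to a backend chosen round robin, and copies bytes in both directions. It is a teaching toy with obvious simplifications (no health checks, TLS, or regional awareness) and is not how Google Front Ends are built; the echo backends exist only for the demo.

```python
import asyncio
import itertools

async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then half-close the writer."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
        if writer.can_write_eof():
            writer.write_eof()  # tell the other side we are done sending
    except ConnectionError:
        pass  # one side went away; the handler closes both connections

async def start_proxy(backends, host="127.0.0.1", port=0):
    """Accept client TCP sessions and forward each one to the next backend (round robin)."""
    rotation = itertools.cycle(backends)

    async def handle_client(client_reader, client_writer):
        backend_host, backend_port = next(rotation)
        try:
            # The client's TCP session ends at the proxy; a new session goes to the backend.
            backend_reader, backend_writer = await asyncio.open_connection(backend_host, backend_port)
        except OSError:
            client_writer.close()  # backend unreachable: drop the client connection
            return
        try:
            await asyncio.gather(
                pipe(client_reader, backend_writer),
                pipe(backend_reader, client_writer),
            )
        finally:
            backend_writer.close()
            client_writer.close()

    return await asyncio.start_server(handle_client, host, port)

async def echo_backend(tag: bytes):
    """Demo backend: reply with a tag plus whatever the client sent."""
    async def handle(reader, writer):
        data = await reader.read(65536)
        writer.write(tag + data)
        await writer.drain()
        writer.close()
    return await asyncio.start_server(handle, "127.0.0.1", 0)

async def demo():
    backend_a = await echo_backend(b"A:")
    backend_b = await echo_backend(b"B:")
    addrs = [s.sockets[0].getsockname()[:2] for s in (backend_a, backend_b)]
    proxy = await start_proxy(addrs)
    proxy_port = proxy.sockets[0].getsockname()[1]
    replies = []
    for _ in range(3):
        reader, writer = await asyncio.open_connection("127.0.0.1", proxy_port)
        writer.write(b"hello")
        writer.write_eof()
        replies.append(await reader.read())  # read until the proxy closes its side
        writer.close()
        await writer.wait_closed()
    for server in (proxy, backend_a, backend_b):
        server.close()
        await server.wait_closed()
    return replies

print(asyncio.run(demo()))  # [b'A:hello', b'B:hello', b'A:hello']
```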

TCP proxy load balancing is suitable whenever you’re dealing with TCP traffic and don’t need SSL offload.

Creating Your First Load Balancer on GCP

In this section, you’ll learn how to create a load balancer on GCP.

For a production workload, you should manage and provision your resources as code, using a tool such as Terraform, rather than through the web console. But to keep things simple, the load balancer in this example is provisioned from the GCP console.

Note: To access GCP, you need a Google account. If you don’t have one, create one before you continue.

Let’s dive right in.

Step One: Create an Instance Group

- Log in to the GCP dashboard, click “Compute Engine,” and then click “Instance groups.”

- Once Compute Engine is ready, click “Create Instance Group.”

- Enter a name and description, select the region and zone (you can use either a single zone or multiple zones), create an instance template, and click “Create.”

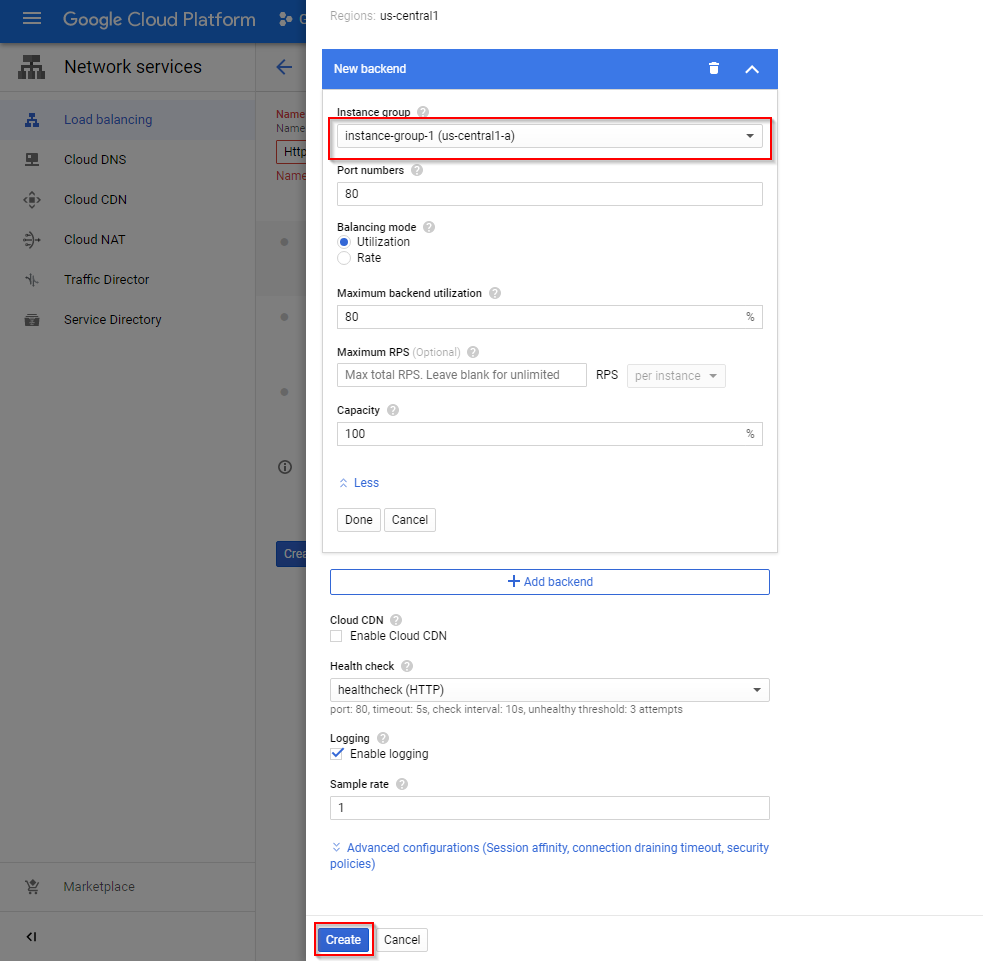

Step Two: Create an HTTP(S) Load Balancer

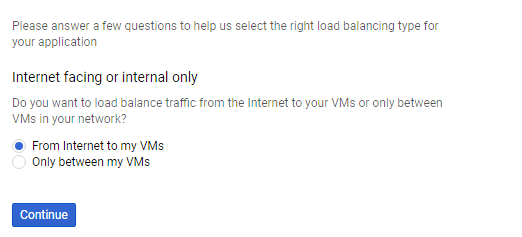

- To create the HTTP(S) load balancer, navigate to “Network Services,” click “Load balancing,” and then click “Create a Load Balancer.”

- Click the “Start Configuration” button under the HTTP(S) Load Balancing option.

- Select the first option and click “Continue.” With this option, the HTTP(S) load balancer distributes web application traffic from the internet to your virtual machines.

- Set up the Backend configuration and the Frontend configuration.

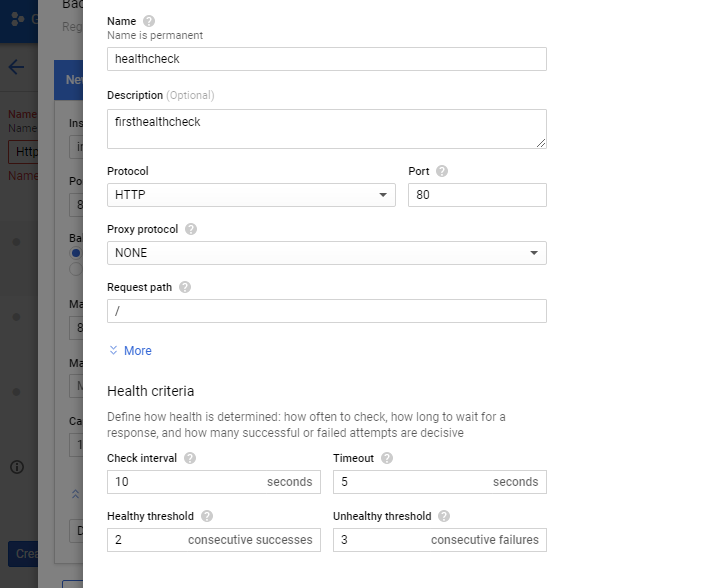

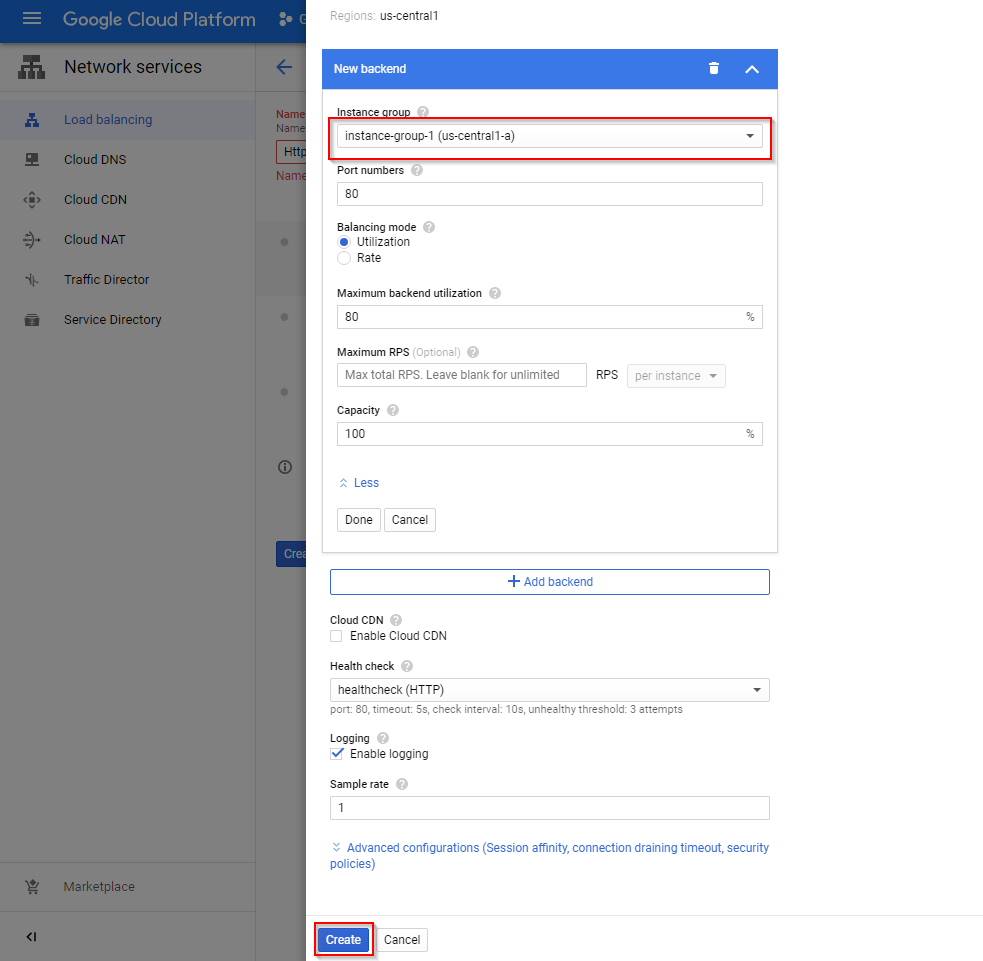

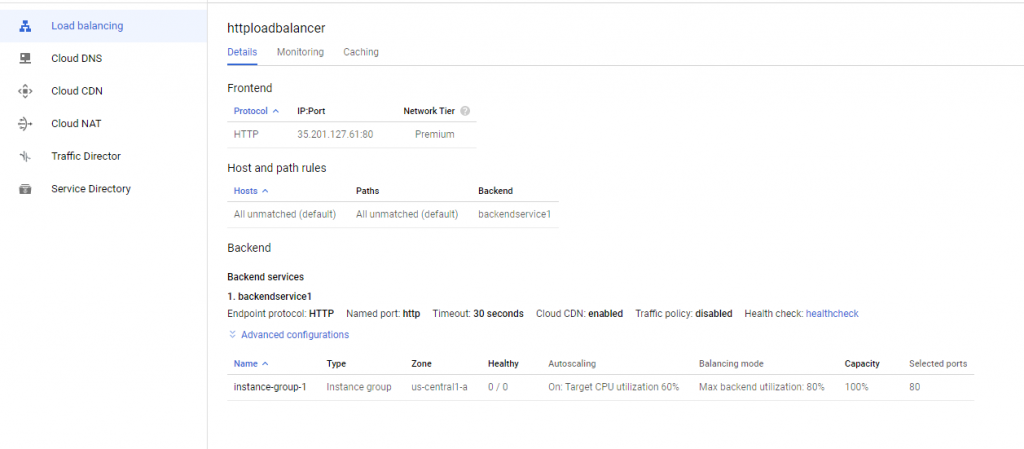

- Enter the load balancer name, select the backend configuration, fill in the necessary information (see the screenshots above), create a health check, and then create the backend service. The health check ensures that the load balancer routes traffic only to healthy, running instances.

- Click “Host and Path Rules,” and confirm that the newly created backend service is selected.

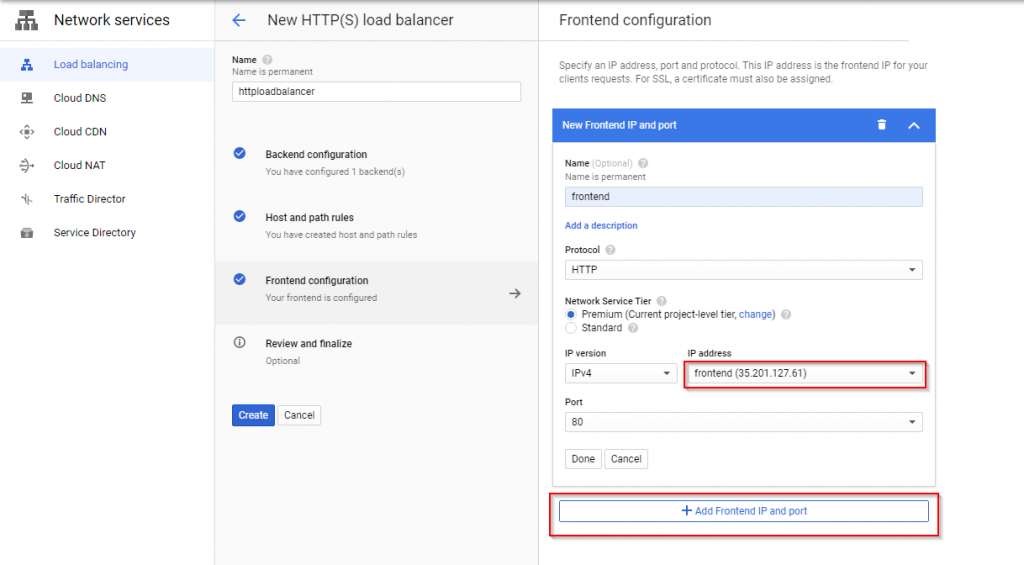

- To create a frontend configuration, click “Frontend configuration,” enter a name, and click the IP address box to reserve a new static IP address. Then click “Add Frontend IP and port.”

- Click the “Review and finalize” tab to confirm that everything checks out. If you’re happy with the configuration, click the “Create” button.

Congratulations, you’re now done creating your first load balancer on GCP.

Note: You can click the newly created load balancer to view its details.

Choosing the Right Load Balancer in GCP

To choose the right load balancer, first consider what you want to achieve with it. Below are some pointers:

- Use internal load balancers whenever you want to route and balance traffic within your GCP network.

- External load balancers are an ideal choice if you’re distributing traffic from the internet to a Google Cloud network.

- Use global load balancers whenever your backend endpoints live in multiple regions and you want to distribute Layer-7 traffic to them. Global load balancers enable you to deploy capacity in multiple regions without adding a new load balancer address for new regions or modifying the DNS entries. They also allow for cross-region overflow and failover.

- Use regional load balancers whenever your backend endpoints live in a single region and you want to balance and distribute Layer-4 traffic coming into the data centers to this set of endpoints. Regional load balancers enable you to preserve the client IP address and also perform TLS termination at the backend instances.

- Use TCP load balancers whenever you want to distribute TCP traffic and don’t need SSL offload.

- Use SSL load balancers whenever you want to distribute TCP traffic and need SSL offload.