The benefits of migrating workloads between cloud providers, or between private and public clouds, can only be fully realized with an understanding of the cloud business model and of cloud workload management. Cloud adoption appears to have reached the phase where advanced users are building their own hybrid solutions or migrating between clouds while striving for interoperability across their systems. This article answers some of the questions that arise when managing cloud workloads.

Q1: What is a cloud workload and cloud workload management?

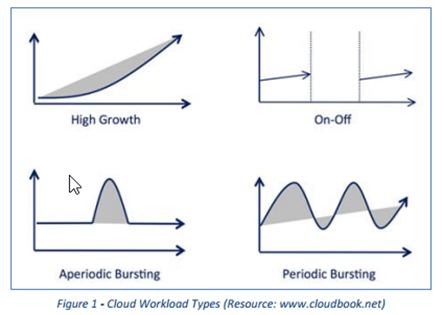

What constitutes a cloud workload depends on the cloud service layer (e.g., IaaS, PaaS, or SaaS). In IaaS, the workload is the compute or storage units consumed by the cloud consumer over a period of time. In PaaS, the workload refers to the processing effort of the software stack, while in SaaS it refers to the usage and demand patterns of the end user or system. One way to measure workload throughput is to analyze utilization efficiency, that is, how much of the provisioned capacity is actually used. Cloud workload management requires an understanding of resource demand in order to keep capacity utilization efficient at all times. It also means having the visibility and tools to serve steady demand from fixed capacity and to burst to on-demand capacity for peaks, while aiming for ideal throughput of IT and cloud resources.
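
To make "utilization efficiency" and "fixed capacity plus bursting" concrete, here is a minimal sketch. It assumes demand is measured in whole capacity units per time slot, defines efficiency as used capacity divided by provisioned capacity, and takes the minimum observed demand as the fixed baseline; the function names, the baseline rule, and the sample numbers are all hypothetical illustrations rather than a standard metric.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CapacityPlan:
    baseline: int    # fixed units reserved for steady demand
    peak_burst: int  # largest on-demand increment needed above the baseline


def utilization_efficiency(demand: list[int], provisioned: list[int]) -> float:
    """Fraction of provisioned capacity actually used across all time slots."""
    if len(demand) != len(provisioned):
        raise ValueError("demand and provisioned must cover the same time slots")
    total_provisioned = sum(provisioned)
    if total_provisioned == 0:
        return 0.0
    # Demand above what was provisioned cannot be served, so cap it per slot.
    used = sum(min(d, p) for d, p in zip(demand, provisioned))
    return used / total_provisioned


def plan_capacity(demand: list[int]) -> CapacityPlan:
    """Hypothetical rule: cover the demand floor with fixed units, burst for the rest."""
    baseline = min(demand)
    return CapacityPlan(baseline=baseline, peak_burst=max(demand) - baseline)


hourly_demand = [4, 4, 5, 9, 12, 6, 4]           # illustrative demand, in units
static = [12] * len(hourly_demand)               # provisioned for the peak all day
plan = plan_capacity(hourly_demand)              # CapacityPlan(baseline=4, peak_burst=8)
elastic = [max(d, plan.baseline) for d in hourly_demand]  # baseline plus bursts

print(round(utilization_efficiency(hourly_demand, static), 2))  # 0.52
print(utilization_efficiency(hourly_demand, elastic))           # 1.0
```

Provisioning statically for the peak leaves roughly half the capacity idle in this example, while a fixed baseline with on-demand bursts tracks demand exactly. Real workloads rarely fit so neatly, since bursting has its own lead time and price premium.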

Q2: What are the cloud consumer’s considerations for switching between clouds?

Having had this conversation many times, I've found that there are many considerations to keep in mind when contemplating a switch between clouds. Workload migration, whether between private and public clouds or between cloud vendors, should be driven by the business value gained by the cloud user: reduced costs, enhanced security, improved availability, or decreased cloud vendor lock-in. Workload migration automation, or workload transportation rules, must be based on full transparency into the dynamic cloud environment. Today, there is a growing debate about the feasibility of transporting workloads between cloud vendors.
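
A workload transportation rule driven by business value could be expressed roughly as follows. This is a hypothetical sketch: the `CloudOption` fields, the security and availability gates, and the break-even test over a planning horizon are assumptions chosen to mirror the values listed above, not an established policy format.

```python
from dataclasses import dataclass


@dataclass
class CloudOption:
    """A candidate environment; all fields are illustrative inputs."""
    name: str
    monthly_cost: float
    availability: float          # e.g. 0.999 for "three nines"
    meets_security_policy: bool


def should_migrate(current: CloudOption, target: CloudOption,
                   migration_cost: float, horizon_months: int,
                   min_availability: float) -> bool:
    """Hypothetical rule: move only if the target passes the security and
    availability gates and the savings over the planning horizon exceed
    the one-time migration cost."""
    if not target.meets_security_policy:
        return False
    if target.availability < min_availability:
        return False
    savings = (current.monthly_cost - target.monthly_cost) * horizon_months
    return savings > migration_cost


private = CloudOption("private-dc", 10_000, 0.995, True)
public = CloudOption("public-a", 8_500, 0.999, True)
print(should_migrate(private, public, 12_000, 12, 0.99))  # True: 18,000 saved > 12,000
print(should_migrate(private, public, 12_000, 6, 0.99))   # False: 9,000 saved < 12,000
```

The same target can be worth moving to over one planning horizon and not over another, which is one reason such rules depend on transparent, current data about the environment.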

Q3: How does the perspective of the workload differ between the cloud vendor and its consumer?

From the vendor's perspective, the cloud workload refers to the physical machine compute units utilized over a period of time (second, minute, hour), and the vendor's goal is to eliminate idle physical capacity. The consumer's workload, by contrast, refers to his or her use of the cloud compute utility to meet demand while terminating idle capacity. Essentially, both sides share the need to be efficient: capacity should match demand while resources run at maximum throughput at all times. Amazon EC2 Spot Instances are a good example: they offer spare, otherwise idle capacity at lower prices, which incentivizes users to consume it and thus maximizes utilization of AWS's underlying physical compute resources.

Q4: What is interoperability? Are there cloud standards?

Cloud interoperability is the ability to use the same tools, processes, and compute images across a variety of computing providers and platforms. Migrating cloud workloads between environments or between clouds requires common concepts across the board, all the way down to actual API standards that enable and facilitate cloud integration.
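
One way to picture "common concepts down to the API" is a provider-neutral interface that every environment implements, so that migration code never touches vendor-specific calls. The sketch below is hypothetical: `ComputeProvider`, `InMemoryProvider`, and `move_workload` are illustrative names, not part of any real standard, and the in-memory adapter stands in for one that would wrap an actual vendor SDK.

```python
from typing import Protocol


class ComputeProvider(Protocol):
    """Hypothetical provider-neutral interface; not a real standard API."""
    name: str

    def launch(self, image: str, size: str) -> str: ...
    def terminate(self, instance_id: str) -> None: ...


class InMemoryProvider:
    """Stand-in adapter; a real one would translate these calls to a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.instances: dict[str, tuple[str, str]] = {}
        self._counter = 0

    def launch(self, image: str, size: str) -> str:
        self._counter += 1
        instance_id = f"{self.name}-{self._counter}"
        self.instances[instance_id] = (image, size)
        return instance_id

    def terminate(self, instance_id: str) -> None:
        del self.instances[instance_id]


def move_workload(src: ComputeProvider, dst: ComputeProvider,
                  instance_id: str, image: str, size: str) -> str:
    """Relaunch the same compute image on the target, then stop the source."""
    new_id = dst.launch(image, size)  # start on the target before stopping the source
    src.terminate(instance_id)
    return new_id


cloud_a = InMemoryProvider("cloud-a")
cloud_b = InMemoryProvider("cloud-b")
vm = cloud_a.launch("web-image-v1", "medium")
print(move_workload(cloud_a, cloud_b, vm, "web-image-v1", "medium"))  # cloud-b-1
```

The migration logic is written once against the shared interface; only the adapters differ per provider. Without an agreed standard, each organization ends up maintaining such an abstraction layer itself.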

“…the Open Data Center Alliance (ODCA), a global member-led organization, has brought together hundreds of end user enterprise IT organizations to work together in an attempt to drive broad scale requirements for the enterprise-ready cloud. The group recently announced the release of a new POC (Proof-of-Concept) paper developed to determine where the virtual machine (VM) industry currently stands in meeting interoperability requirements outlined in an ODCA VM interoperability usage model.” Source: Forbes.com

Q5: What’s the degree of cloud vendor lock-in?

Cloud vendor lock-in is a significant consideration when adopting a specific cloud vendor or platform. The degree of lock-in depends on the expected cost of switching clouds. With that in mind, the discussion should compare traditional data center “lock-in” with public or private cloud vendor “lock-in”. On this basis, public cloud consumers seem to expect not to be locked in, simply because they have made no upfront hardware investment. Heavy reliance on proprietary APIs, however, can create deeper lock-in that drives costs up and undermines the savings achievable through portability and technical efficiency. Past experience and the new perception of the cloud have led experienced IT leaders to measure their vendors' lock-in levels more carefully. Vendors that don't lock in their customers may be perceived as a better option by organizations considering cross-cloud deployment.
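
Since lock-in depends on the expected cost of switching, a rough cost model shows why proprietary API dependence matters. This is a hypothetical sketch: the cost components (data egress, rewriting each proprietary API integration, retraining), the "lock-in ratio" expressed in years of spend, and every number below are illustrative assumptions, not vendor prices.

```python
def switching_cost(data_tb: float, egress_per_tb: float,
                   proprietary_apis: int, rewrite_cost_per_api: float,
                   retraining_cost: float) -> float:
    """Rough one-time cost of leaving a vendor (hypothetical cost model)."""
    transfer = data_tb * egress_per_tb                  # moving data out
    rewrites = proprietary_apis * rewrite_cost_per_api  # replacing vendor-specific APIs
    return transfer + rewrites + retraining_cost


def lock_in_ratio(cost_to_switch: float, annual_spend: float) -> float:
    """Switching cost expressed in years of current spend; higher means more locked in."""
    return cost_to_switch / annual_spend


# Same data volume and team; only the number of proprietary API dependencies differs.
portable = switching_cost(50, 90, proprietary_apis=2,
                          rewrite_cost_per_api=20_000, retraining_cost=5_000)
coupled = switching_cost(50, 90, proprietary_apis=10,
                         rewrite_cost_per_api=20_000, retraining_cost=5_000)
print(portable, coupled)                                 # 49500 209500
print(round(lock_in_ratio(portable, 120_000), 2))        # 0.41
print(round(lock_in_ratio(coupled, 120_000), 2))         # 1.75
```

In this illustration, data transfer is a small share of the total; the proprietary API rewrites dominate, which is why heavy reliance on them erodes the savings that portability is supposed to deliver.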

To learn more about managing cloud workloads, sign up for the live webinar, Managing Cloud Workloads: Back to Basics with Joe Weinman of Cloudonomics and Patrick Pushor of CloudChronicle.com on Thursday, May 16th at 12pm EST.