Just as virtual machines did when they first burst onto the scene, containers have drawn quite a wide-eyed response as a possible replacement for virtual machines for hosting your applications.

VMware GSX was the first virtualization platform I worked on. I remember being very skeptical back then about deviating from my mindset of one application per physical server. Little did I know that the technology I was dabbling in then would change the way I work with servers for years to come.

And now? Virtualization has become the way of life for every IT team! Or should I say it was…until containers came along?

Compared to virtual machines (where a physical server’s resources are sliced and diced and a hypervisor layer sits in between to manage access to those resources), containers make life simpler by isolating only the processes and binaries associated with an application.

Containers use the concept of OS-level virtualization, where containers share the underlying operating system and a set of binaries. Process isolation and resource management are done at the kernel level, which improves efficiency and agility. Containers fit perfectly into a microservices-based architecture, where each component of the application is developed, managed, and deployed independently on lightweight hosting platforms.

A Short History of Containers

Sure, Docker was the first popular container platform. But the concept of containers can actually be traced back to “chroot,” which provided file system isolation and was introduced by Bell Labs in 1979 with Version 7 Unix. The term “container” was first used in 2005, though, when Solaris Containers were released. FreeBSD jails, Linux Containers (LXC), OpenVZ, and Linux-VServer are some of the earlier players that dabbled with containerization.

The initial container platforms were quite complex and had limited mobility, as they depended to a great extent on the system configuration. Containers eventually gained traction with the advent of Docker, which provided a way to encapsulate an application and its dependencies in an easy-to-ship format that runs on any compatible OS where the Docker platform is available. Originally, containers leveraged Linux features such as kernel namespaces, SELinux, seccomp, chroot, and control groups to run in isolation. It took a bit longer to bring containers to Windows; Docker support was announced along with the general availability of Windows Server 2016.

Containers and Cloud Platforms

Now that containers are mainstream, cloud service providers like Azure, AWS, and Google have been very quick to endorse this “new kid on the block.” AWS started supporting containers in 2015 with its Elastic Container Service. Google pioneered the container orchestration platform workstream with its very popular Kubernetes offering. With the shift in Microsoft’s strategy to embrace all things open-source, Azure now also offers multiple popular services that help customers host their containers. Let’s explore some of those options and see whether one of them might be the technology you are looking for to get started with containers in Azure.

Run Independent Containers with Azure Container Instances

Azure Container Instances (ACI) offers the simplest option for deploying containers in Azure without the overhead of any higher-level service. You can deploy independent containers using ACI without having to manage any virtual machines in the backend. Applications deployed in containers can be accessed directly over the internet through a public IP address or an Azure-provided FQDN. (You can also deploy ACI into a virtual network for private access to the applications, but this feature is currently in preview.)

Container instances are deployed securely, so that your application runs in isolation, similar to how it would run in a virtual machine. ACI supports deployment of both Windows and Linux containers. Containers are deployed in container groups; the containers in a group are hosted on the same machine and share the same network, lifecycle, and storage. They are also exposed through the same IP address and DNS name label. Currently, such multi-container deployments are supported only for Linux, though. They are useful when you want to deploy inter-related functionalities of an application in different containers, say, the application interface and a logging component. Users can choose the required compute capacity while deploying container instances. GPU-enabled ACI instances are also available in preview and can be explored by customers with compute-intensive use cases such as machine learning.
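To make the deployment options above a little more concrete, here is a minimal Python sketch that only assembles (and does not run) an `az container create` command line, along with the FQDN you would typically expect for a given DNS name label. The resource group, container name, and DNS label are hypothetical placeholders, the image is assumed to be the public sample used in Microsoft's ACI quickstart, and the `<label>.<region>.azurecontainer.io` pattern is the commonly documented one rather than something stated in this article.

```python
import shlex


def aci_create_command(resource_group, name, image, dns_label,
                       os_type="Linux", cpu=1, memory_gb=1.5, port=80):
    """Build the argument list for `az container create` (nothing is executed)."""
    return [
        "az", "container", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--image", image,
        "--os-type", os_type,          # ACI supports Linux and Windows containers
        "--cpu", str(cpu),             # number of CPU cores requested
        "--memory", str(memory_gb),    # memory requested, in GB
        "--ports", str(port),          # port opened on the public IP
        "--dns-name-label", dns_label, # label used to form the public FQDN
    ]


def aci_fqdn(dns_label, region):
    """Return the FQDN pattern usually assigned to a labelled container group."""
    return f"{dns_label}.{region}.azurecontainer.io"


cmd = aci_create_command(
    resource_group="demo-rg",      # hypothetical resource group
    name="hello-aci",              # hypothetical container name
    image="mcr.microsoft.com/azuredocs/aci-helloworld",  # assumed sample image
    dns_label="hello-aci-demo",    # hypothetical; must be unique within the region
)
print(shlex.join(cmd))
print(aci_fqdn("hello-aci-demo", "eastus"))
```

Pasting the printed command into a shell with the Azure CLI installed and logged in should create a single container with the requested CPU and memory; adjust the values to your own subscription before trying it.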

Container Orchestration Using Azure Kubernetes Service (AKS)

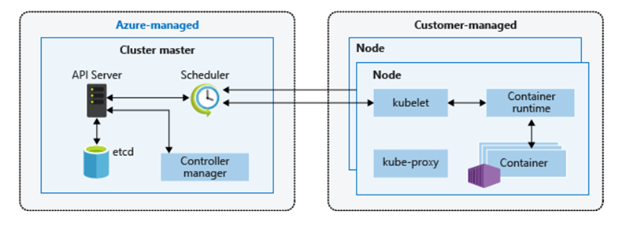

Kubernetes is a container orchestration platform, originally developed by Google, that aids production-scale deployment of containers. It takes care of challenges such as high availability, scalability, and security of applications running in containers to make them enterprise-ready. With AKS, Azure provides a managed Kubernetes cluster service where the management plane of the cluster is deployed and owned by the platform. Users just need to manage the agent nodes of the cluster and the containers deployed on them.

The cluster master nodes that enable the core Kubernetes services and orchestration of workloads are managed by the Azure platform. The cluster nodes where the application workloads are eventually deployed, however, are managed by the customer. AKS uses Docker as the runtime to run your application containers. Like any Kubernetes cluster, AKS uses the concept of “pods”: a pod is a single instance of the application in the cluster and often has a 1:1 mapping with a container.
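As an illustration of that 1:1 mapping, the following Python sketch builds a minimal Kubernetes Pod manifest containing exactly one container and prints it as JSON. The pod name and the `nginx:1.25` image are placeholder assumptions chosen for the example, not something prescribed by AKS.

```python
import json


def pod_manifest(name, image, port):
    """Return a minimal Kubernetes Pod manifest with a single container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # One pod, one container: the common 1:1 mapping.
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


manifest = pod_manifest("web", "nginx:1.25", 80)  # hypothetical name and image
print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`. In practice, pods are usually created indirectly through higher-level objects such as Deployments, so treat this as a way to see the shape of a pod rather than a recommended workflow.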

Let’s look at some of the features that make AKS an attractive solution for enterprise customers who want to deploy containers in Azure:

- AKS can be integrated with Azure Active Directory to implement role-based access control (RBAC) for cluster resources. Users can log in using the same identity that they use to access other Azure resources.

- AKS offers a seamless upgrade to the latest Kubernetes version directly from the portal or through the CLI. Nodes are gracefully drained during the upgrade so that the applications deployed in the cluster see minimal downtime.

- It supports GPU-enabled agent nodes for deploying compute-intensive graphics and visualization workloads.

- AKS can be integrated with Azure Monitor and Log Analytics to leverage out-of-the-box container and cluster monitoring capabilities.

- It supports advanced networking capabilities through the Azure Container Networking Interface (CNI) plugin, which is designed to help securely connect the application pods to other Azure resources and services.

- Customers can opt for a multi-cluster deployment architecture of AKS, with Azure Traffic Manager redirecting traffic based on user geo-location proximity and cluster response time.
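The cluster lifecycle behind these features can be sketched as a short sequence of Azure CLI calls. The Python snippet below only assembles the command lines for creating a cluster, fetching credentials for `kubectl`, listing available upgrades, and upgrading; it does not execute them. The resource group, cluster name, node count, and target version are hypothetical values, and the real target version should be picked from whatever `az aks get-upgrades` reports for your cluster.

```python
import shlex


def aks_lifecycle_commands(resource_group, cluster, node_count, target_version):
    """Build `az aks` command lines for a create-then-upgrade workflow."""
    base = ["--resource-group", resource_group, "--name", cluster]
    return [
        # Create the managed cluster; Azure hosts the control plane.
        ["az", "aks", "create", *base,
         "--node-count", str(node_count), "--generate-ssh-keys"],
        # Merge the cluster credentials into the local kubeconfig.
        ["az", "aks", "get-credentials", *base],
        # List the Kubernetes versions the cluster can be upgraded to.
        ["az", "aks", "get-upgrades", *base, "--output", "table"],
        # Upgrade the cluster to the chosen version.
        ["az", "aks", "upgrade", *base, "--kubernetes-version", target_version],
    ]


commands = aks_lifecycle_commands(
    resource_group="demo-rg",   # hypothetical resource group
    cluster="demo-aks",         # hypothetical cluster name
    node_count=3,               # hypothetical agent node count
    target_version="1.29.0",    # hypothetical; take it from get-upgrades output
)
for command in commands:
    print(shlex.join(command))
```

Keeping the commands as argument lists rather than one long string makes it easy to hand them to `subprocess.run` later if you decide to automate the workflow.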

Container Image Management with Azure Container Registry

Azure Container Registry (ACR) is a fully managed private Docker registry that can be used to store container images for your on-premises container framework, Kubernetes clusters, and Docker Swarm. It can also be used for container deployments to Azure services like AKS, ACI, App Service, and Service Fabric.

An ACR can have multiple repositories to store groups of Docker-compatible container images. These images can be either Windows- or Linux-based and can be managed using standard Docker commands. ACR can also be used to store Helm charts, a packaging format used to deploy applications to Kubernetes. ACR can be easily integrated with DevOps tools like Azure DevOps and Jenkins to automatically update your container images whenever the application code is modified.
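Managing images "using standard Docker commands" usually comes down to tagging a local image with the registry's login server name and pushing it. The sketch below, with a hypothetical registry name, repository, and tag, builds the fully qualified image reference and the corresponding command lines without running them. It assumes the usual `<registry>.azurecr.io` login server naming.

```python
def acr_image_ref(registry, repository, tag="latest"):
    """Return the fully qualified image reference for an ACR repository."""
    # ACR login servers are conventionally written in lowercase.
    return f"{registry.lower()}.azurecr.io/{repository}:{tag}"


def acr_push_commands(registry, local_image, repository, tag):
    """Build the command lines to log in, tag a local image, and push it."""
    ref = acr_image_ref(registry, repository, tag)
    return [
        ["az", "acr", "login", "--name", registry],  # authenticate Docker to ACR
        ["docker", "tag", local_image, ref],         # alias the local image
        ["docker", "push", ref],                     # upload it to the registry
    ]


# Hypothetical registry, image, repository, and tag for illustration only.
push_commands = acr_push_commands("DemoRegistry", "web:dev", "apps/web", "v1")
for command in push_commands:
    print(" ".join(command))
```

The same image reference is what you would then put in an ACI deployment or a Kubernetes pod specification to pull the image from the private registry.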

Other Deployment Options

While Azure Container Instances, ACR, and AKS remain the primary container-focused services in Azure, there are a few other services worth exploring, depending on the customer use case.

- Azure Web Apps supports deployment of Windows and Linux containers by leveraging images from Docker Hub or private registries.

- App Service Environment supports Windows container deployment in public preview.

- Azure Batch can be used to run large-scale parallel and HPC batch computing jobs in Docker-compatible containers in a backend Batch pool.

- Azure Service Fabric offers another container orchestration platform for deploying your Windows and Linux containers in Azure, targeting microservices architectures.

Final Note

As you can now see, Azure offers a full range of services to support your container deployment needs in the cloud.

Modern-day applications are moving towards architectures with minimal infrastructure management requirements, and a combination of containers and cloud fits the bill perfectly. Organizations that want to stay ahead cannot ignore containers. Go ahead and get your hands dirty with containers in Azure, and yes, you can thank me later!