When AWS introduced its Titan language models in 2023, the move made sense from a strategic perspective. As a cloud provider supporting third-party LLMs through its Amazon Bedrock platform, the cloud giant needed its own proprietary models—if only to offer customers a native, secure, and privacy-compliant option. But Titan never became a meaningful player in the GenAI market.

Despite Amazon’s reputation for scale and engineering, Titan was perceived as a conservative release, lacking the innovation seen in models from OpenAI or Google. Specifically, Titan models struggled with complex reasoning and creative generation, two areas that had rapidly become baseline expectations for enterprise-grade AI applications.

Throughout 2023 and 2024, updates to Titan trickled in—improved fine-tuning tools, better performance on retrieval-augmented generation (RAG) tasks, and enhanced responsible AI guardrails—but Titan continued to lag behind leading models in benchmarks, adoption, and industry mindshare. It lacked the accuracy of GPT-4, the alignment of Claude, and the multimodality of Gemini.

This context is critical to understanding the significance of Amazon Nova, launched at AWS re:Invent 2024.

Where Titan aimed for safe adequacy, Nova reflects a decisive pivot toward competitiveness and scale. Nova represents AWS’s recognition that to stay relevant in the GenAI landscape, it needed to invest in cutting-edge model capabilities while leveraging its core strengths in enterprise infrastructure, integration, and security.

Rather than serving as a generic alternative, Nova was positioned as a new generation of purpose-built, multimodal AI models tailored for AWS-native enterprises.

From Foundation to Frontier

Amazon’s journey into generative AI (GenAI) has been marked by cautious progression. Starting with SageMaker in 2017, AWS focused on traditional machine learning tools that empowered ML experts rather than on accessible GenAI tools for a broader audience.

For years, Amazon seemed content to let others race ahead. Even as it hosted models from AI21 Labs, Anthropic, Cohere, Stability AI, and Meta through Bedrock, its own presence in the GenAI arena was muted—marked by incremental steps rather than bold leaps.

The path leading to Nova unfolded as a series of cautious moves:

- Foundations (2017): The launch of SageMaker marked AWS’s early investment in ML, focused on classical machine learning rather than next-generation AI.

- Gap years (2017–2023): A notable absence of GenAI innovation. SageMaker remained powerful but complex, limiting adoption among developers and business users without deep ML expertise.

- Bedrock + Titan (2023): Bedrock introduced managed access to foundation models. This included Amazon Titan, which emphasized responsible AI and AWS-native integration but fell short of the performance and innovation seen in OpenAI, Anthropic, or Google’s offerings.

- Amazon Q (2023): AWS’s GenAI assistant aimed at task-specific use cases—developer help, business automation, contact centers—leveraging the best models available within Bedrock, and later incorporating Nova.

- Titan Struggles (Early–Mid 2024): Despite ongoing improvements—better RAG performance, fine-tuning capabilities, agentic tools—Titan struggled to capture meaningful market share.

- Nova (Dec 2024): The Nova family debuted at re:Invent 2024, delivering multimodal capabilities, competitive benchmarks, enterprise-grade scalability, and aggressive pricing.

In June 2024, Adam Selipsky stepped down as AWS CEO, and Matt Garman, a longtime AWS executive, took the helm.

Garman’s appointment signaled a renewed focus on accelerating innovation in the GenAI space. He emphasized the importance of AWS being at the cutting edge of every technological advancement, acknowledging the need to match the rapid pace set by competitors.

Under Garman’s leadership, AWS has intensified its investment in AI, including an $8 billion commitment to AI startup Anthropic and the development of its own AI chips, such as Trainium, to enhance performance and cost-efficiency.

With Nova, Amazon signaled a strategic shift: moving beyond simply enabling AI access to actively building a competitive ecosystem of proprietary models and developer tools. The Nova family launched under a shared architecture, offering a range of models designed for different performance and cost trade-offs—spanning use cases from fast text generation to high-resolution video rendering.

The Nova Family

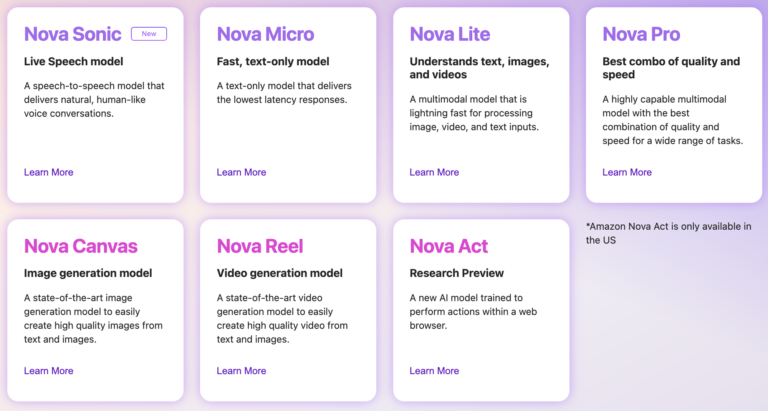

Since its launch in December 2024, Amazon has steadily expanded the Nova family, signaling a deeper commitment to building a differentiated enterprise AI ecosystem. Nova models are designed along a performance spectrum optimized for varying throughput, latency, and multimodal needs.

All Nova models—except Micro—support multimodal inputs across text, images, and in some cases video.

This mirrors a broader industry shift toward multimodality, seen across providers like OpenAI and Google, though AWS emphasizes a continuum of specialized models rather than a single generalist.

Access to Nova is exclusive to Amazon Bedrock. There is no standalone API, no self-hosting option, and no external deployment path—raising concerns around portability for organizations pursuing multi-cloud strategies. Still, deep native integration often outweighs portability concerns, especially for complex GenAI systems where replicating infrastructure elsewhere is more theoretical than practical.
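For readers who want to see what Bedrock-only access looks like in practice, here is a minimal sketch of a request to a Nova model through the Bedrock Converse API using boto3. The model IDs, the default region, and the helper names `build_converse_request` and `ask_nova` are assumptions for illustration; some regions may require an inference profile ID instead of the bare model ID, so check the Bedrock console for your account. The check that rejects images for Micro simply encodes the text-only limitation described above.

```python
from typing import Optional

# Assumed Bedrock model IDs for three Nova tiers; verify them in your account.
NOVA_MODEL_IDS = {
    "micro": "amazon.nova-micro-v1:0",
    "lite": "amazon.nova-lite-v1:0",
    "pro": "amazon.nova-pro-v1:0",
}

# Per the article, Micro is the one text-only member of the family.
TEXT_ONLY_TIERS = {"micro"}


def build_converse_request(
    tier: str,
    prompt: str,
    image_bytes: Optional[bytes] = None,
    image_format: str = "png",
    max_tokens: int = 512,
) -> dict:
    """Build keyword arguments for the Bedrock Converse API (hypothetical helper)."""
    if tier not in NOVA_MODEL_IDS:
        raise ValueError(f"unknown Nova tier: {tier!r}")

    content = [{"text": prompt}]
    if image_bytes is not None:
        if tier in TEXT_ONLY_TIERS:
            raise ValueError(f"Nova {tier} accepts text input only")
        # Converse expects raw image bytes plus a format hint.
        content.append(
            {"image": {"format": image_format, "source": {"bytes": image_bytes}}}
        )

    return {
        "modelId": NOVA_MODEL_IDS[tier],
        "messages": [{"role": "user", "content": content}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


def ask_nova(request: dict, region: str = "us-east-1") -> str:
    """Send the request through Bedrock and return the first text block."""
    import boto3  # imported lazily so the request builder works without AWS setup

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]
```

Calling `ask_nova(build_converse_request("lite", "Summarize this quarter's results"))` requires AWS credentials and Bedrock model access. Note that the only endpoint involved is the Bedrock runtime, which is the portability trade-off in concrete form.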

At the end of April 2025, AWS also introduced Nova Premier, its most advanced “understanding model” to date. Designed for complex, multi-step tasks, Nova Premier supports multimodal inputs—including text, images, and video. It can also process up to one million tokens in a single input.

According to AWS, the model can play a supervisory role in multi-agent systems, orchestrating specialized agents to execute structured workflows (e.g., financial research or enterprise task automation). It also acts as a teacher model, distilling knowledge into smaller Nova variants optimized for latency and cost. Delivered exclusively through Amazon Bedrock, Nova Premier is positioned as both a high-capacity inference model and the architectural foundation for scalable, cost-aware GenAI deployments.

Next

In April, AWS rolled out nova.amazon.com, a new portal that lets customers explore the full Nova lineup. Although the site is currently geofenced to U.S. users, the launch clearly signals AWS’s push to raise Nova’s profile and drive broader adoption worldwide.

AWS also upped the stakes with Amazon Nova Act, an agent framework positioned to rival OpenAI’s Operator and Anthropic’s Claude agents.

Stay tuned for Parts 2 and 3 of this series, where we dive into Nova Act’s architecture and the broader value proposition Nova offers to enterprises already anchored on AWS.

*Disclaimer: This article was created by Ofir Nachmani, IOD GenAI expert, with the use of ChatGPT deep research.