Machine learning (ML) is, in essence, a concept: predicting patterns, and classifying and clustering data sets, in supervised and unsupervised ways. In practice, ML algorithms run both in systems that process stored data sets and in robotics that deal with real-world information.

More broadly, ML is a huge step forward in how computers can learn in a human-like way without explicit pre-programming.

Today, the Artificial Intelligence systems that take advantage of Machine Learning are making headlines around the globe.

“It is a renaissance, it is a golden age,” said Amazon founder Jeff Bezos. “We are solving problems with machine learning and artificial intelligence that were in the realm of science fiction for the last several decades. Natural language understanding, machine vision problems, it really is an amazing renaissance.”

The world will soon begin to see the kind of technological innovation which until now has only been possible in science fiction. This article will remove the “fiction” that Bezos speaks of above and leave only the science itself, dissecting the structure of ML into the three layers that compose it.

Before we dive into the three layers of ML, let’s first take a look at what ML is and how it differs from artificial intelligence and deep learning.

Artificial Intelligence vs. Machine Learning vs. Deep Learning

You have probably seen Artificial Intelligence and Machine Learning used interchangeably. However, there are differences between the two. Artificial Intelligence is the broader concept: computer systems that carry out tasks normally associated with human intelligence.

Machine Learning is a subset of Artificial Intelligence that is concerned with the ability of computer systems to learn by themselves from a set of data without being explicitly programmed. Machine learning involves three distinct steps:

- Start with some data.

- Train a model on that data.

- Take advantage of the trained model to make predictions on new data.
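
The three steps above can be sketched in a few lines of plain Python. This is only a minimal illustration, assuming a simple linear model fitted by ordinary least squares; the data (apartment sizes and rents) and the `train` and `predict` helpers are hypothetical names invented for this example, not part of any particular framework.

```python
from statistics import mean

def train(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    slope = covariance / variance
    intercept = y_bar - slope * x_bar
    return slope, intercept

def predict(model, x):
    """Apply the trained model to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept

# Step 1: start with some data (hypothetical: apartment size in m^2 and monthly rent).
sizes = [30, 45, 60, 75, 90]
rents = [600, 900, 1200, 1500, 1800]

# Step 2: train a model on that data.
model = train(sizes, rents)

# Step 3: use the trained model to make a prediction on new data.
print(predict(model, 50))  # 1000.0
```

Real projects would normally reach for an existing framework rather than hand-written math, but the shape of the workflow (data, training, prediction) stays the same.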

Deep Learning is yet another concept, a subset of Machine Learning, that deals with deep neural networks. While Machine Learning in general uses algorithms to parse data, train models on that data, and make decisions accordingly, Deep Learning uses artificial neural networks that learn to make those decisions from the raw data. These networks stack many layers on top of each other, hence the name “deep.” You can use Deep Learning to solve complex problems, even when the data set is diverse and unstructured.
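
To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass through a small network in plain Python. It assumes fully connected layers with a ReLU activation between them; the weights are hand-picked for illustration rather than learned, and the `dense`, `relu`, and `forward` helpers are hypothetical names for this example only.

```python
def relu(values):
    """Activation function: keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    """Pass the inputs through every layer in turn; the depth is len(layers)."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]  # the output layer has no activation here
    return dense(activations, weights, biases)

# Hand-picked (not trained) weights: two hidden layers and one output layer.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer 1: 2 inputs -> 2 units
    ([[1.0, 1.0], [-1.0, 2.0]], [0.0, 1.0]),  # hidden layer 2: 2 units -> 2 units
    ([[1.0, -1.0]], [1.0]),                   # output layer: 2 units -> 1 value
]

print(forward([2.0, 1.0], layers))  # [0.5]
```

Training a network means adjusting those weights automatically from data, which is the part that deep learning frameworks handle.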

The ML Ecosystem: The Three Layers of ML

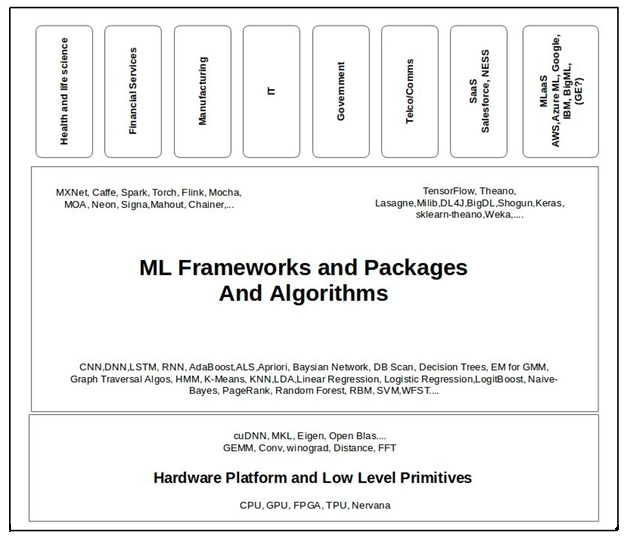

ML is structured into three layers, as depicted in Figure 1 above.

Starting at the bottom, these layers can be described as follows:

- Hardware and Low-Level Primitives: Powerful machines and database technologies that can crunch large data sets with almost unlimited CPU power.

- ML Frameworks and Packages: ML algorithms built with frameworks such as TensorFlow, which can be distributed to domain experts at the next layer. This layer also includes the ML platforms introduced by the giant public cloud vendors over the last few years.

- The Application Layer: Easy-to-use ML solutions that are tailored to solve real-world problems in various industry sectors.

Let’s explore each layer in depth, starting with the underlying compute power and working our way up to the level of real-world application.

Layer 1: The Hardware Platform and Low-Level Primitives

Powerful machines are a key factor when it comes to the performance and cost of crunching large data sets. The algorithms developed are iterative in nature, which requires continuous learning and simulations; this in turn creates the need for an elastic and robust IT infrastructure. Furthermore, current state-of-the-art techniques, such as deep learning algorithms, require large computational resources.

Hardware vendors like Intel have responded to this challenge with the development of high-performing machines. Last year, Intel acquired Habana Labs to broaden its AI portfolio with solutions that span from the data center all the way to the intelligent edge. Intel’s Altera FPGA line, which combines CPUs with re-programmable FPGA processors, is also already seeing some success.

GPUs Arrive on the Scene

Another key player here is Nvidia. Known for its graphics cards for gaming, it also produces GPUs used in large data centers for scientific and business analysis. The backend operations used in video games, such as matrix calculations or parallel calculations of relatively simple equations, are also extremely useful for running ML algorithms, for instance in biology. GPUs are also used to train neural networks, models loosely inspired by the human brain that form the basis of modern ML.
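
The sketch below illustrates why this kind of workload maps well onto parallel hardware. It is a plain-Python matrix multiplication written for clarity, not speed, and the `matmul` helper and the sample matrices are assumptions made for this example. The point to notice is that every output cell is an independent dot product, so in principle all of them can be computed at the same time.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    columns = list(zip(*b))  # transpose b so each column is easy to iterate over
    # Each output cell depends only on one row of a and one column of b,
    # so no cell has to wait for any other cell to be computed.
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in a]

inputs = [[1, 2], [3, 4], [5, 6]]  # 3 samples with 2 features each
weights = [[1, 0, 2], [0, 1, 3]]   # maps 2 features to 3 outputs

print(matmul(inputs, weights))  # [[1, 2, 8], [3, 4, 18], [5, 6, 28]]
```

This code runs the dot products one after another on a CPU; a GPU gets its advantage by running thousands of such small, independent calculations concurrently.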

In the past, these GPUs were designed for graphics computations, not AI. But things are changing, fast. Nvidia just announced their next-gen GPUs, P4 and P40, which are targeted at deep learning training and inference. Companies like Graphcore are designing new processors called IPUs (Intelligence Processing Units), which have AI processing as their primary function. Similarities between processing video game data and ML data have given Nvidia a big push in the market, and Nvidia’s GPUs are now a standard part of Amazon and Microsoft Infrastructure-as-a-Service (IaaS) cloud offerings.

These machines and elastic cloud tiers, in particular the IaaS model, have provided the compute and memory resources necessary to crunch huge amounts of data, greatly reducing ML algorithm training time: what took several weeks to run in a traditional data center now takes a few hours in the cloud.

Low-Level Partners

Another side of this equation involves low-level software libraries that work directly with the hardware, such as NVIDIA cuDNN for GPUs or the Intel® Math Kernel Library (MKL) for CPUs. Libraries like cuDNN have significantly increased GPU ML processing speed. A similar speed advantage is also available, to some extent, when the same kind of optimized code runs on a CPU, as shown by Intel® MKL.

Creating libraries such as cuDNN and MKL and integrating them into frameworks makes for higher hardware utilization and information extraction, without each team needing engineers who specialize in low-level hardware optimization. This means that to get the most out of the hardware, you need proficient software engineers and database architects, but not necessarily mathematicians.

Layer 2: The ML Framework and Packages

For many years, ML was the exclusive field of statisticians with many years of university training and experience in academic analysis. But the field is now changing due to the large amount of publicly available data and the accessibility of robust infrastructures such as AWS.

ML frameworks and packages are developed by ML engineers, who collaborate with data scientists to understand the theoretical methods and business aspects of a specific field.

Data scientists work in conjunction with domain experts to conduct research to find innovative ideas for their ML projects. They will typically use programming tools such as MATLAB, high-level languages such as Python and R, and existing open-source frameworks (e.g., Accord.NET, Apache SINGA, Caffe, H2O, MOA, Spark’s MLlib, mlpack and scikit-learn) to test and implement their ideas and perform analysis on real-world data sets. While these present the core functionality of an algorithm, it’s the ML engineer’s responsibility to make it usable, ensuring the developed framework is efficient, reliable, and scalable.

ML-as-a-Service

In addition, over the last few years, global IT giants including Amazon and Google have presented their own offerings, which you could call “ML-as-a-Service.” These giant cloud infrastructure vendors see the opportunity and are pushing towards ML consumerization. You can easily integrate services such as Amazon Machine Learning with large, cloud-based data stores such as Amazon Redshift.

The real value here is that users of an IaaS get those baked-in ML capabilities, avoiding the huge investment in infrastructure required to build their own complete ML solution. This allows mainstream software engineers to focus on innovating.

Layer 3: The Applications

ML algorithms are applied to spam filters, web searches, ad placements, recommendation systems, credit scoring, stock trading, fraud detection, cloud computing, and state-of-the-art technologies such as driverless cars.

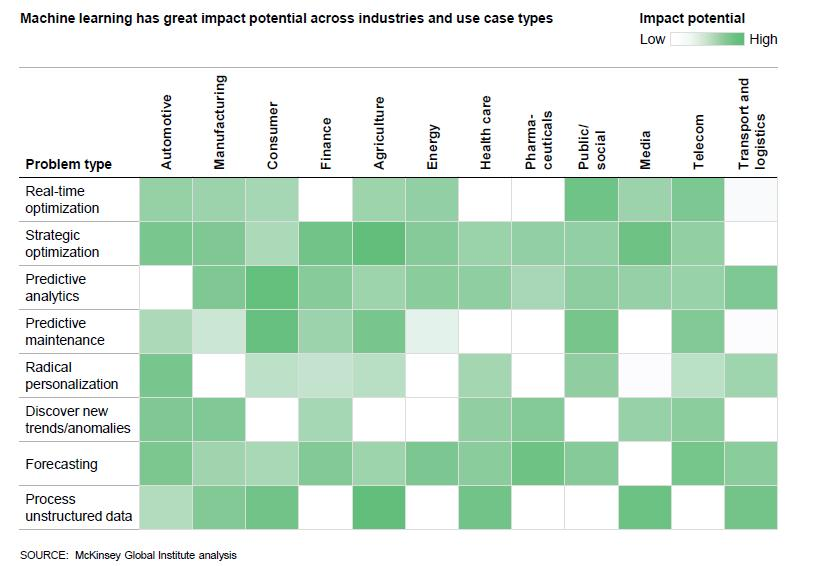

Last year, the McKinsey Global Institute came out with their report, “The age of analytics: Competing in a data-driven world,” which featured a heatmap showing the potential impact of ML across industries and use-case types.

Chatbots and Shopping

Chatbots are one particularly widespread use case that has taken off in recent years, with the chatbot market projected to reach $9.4 billion by 2024. Adopted on major platforms, including Facebook, WhatsApp, Messenger, and Slack, chatbots rely on sophisticated machine learning algorithms to extract content and contextual meaning from conversations. This type of solution continues to evolve and develop its ability to mimic actual human agents.

Another example of how ML is being applied is in personalization and localization. Using ML to optimize systems for personal input, such as voice or behavioral habits, can have a myriad of benefits, such as creating deeper user connections with a brand. Amazon’s Echo, for instance, is fed with data from Alexa, Amazon’s bot. Alexa has access to users’ shopping histories, payment preferences, and delivery details and presents users with recommendations for future products based on their presumed needs and personalities. Another example of this is Salesforce’s Einstein.

Industry 4.0

With the Industry 4.0 revolution underway, the automotive and manufacturing industries are also increasingly adopting ML to smarten up their factory floors. When applied to data on the production line, ML can provide invaluable insights to increase productivity and to identify, and even predict, failure.

OptimalPlus (disclaimer: at the time of publication, OptimalPlus is an IOD customer) brings these capabilities to both the semiconductor and automotive sectors with an end-to-end ML infrastructure that can be rolled out within minutes to any location, identifying outlier parts in real time and preventing them from shipping. Automotive companies using this software can, for example, extract failure signatures from field failures and even recall incidents to prevent that type of failure from happening again.

Healthcare

Last but not least, ML can be used in the healthcare industry, as it can cater to a broad range of medical stakeholders, such as hospitals, medical practices, laboratories, pharmaceutical companies, and patients. ML can be applied to virtually any form of medical data, facilitating drug discovery and development, predictive and personalized medicine, diagnostic tests, management of chronic illness, and more. The Israeli startup Zebra Medical uses ML to identify abnormalities in medical scans, applying machine learning to automate image diagnostics in radiology departments.

Machine Learning: The Future Ahead

It’s important to understand that the three layers outlined above make up an ML ecosystem. Different companies often choose a specific layer in which to invest resources, deciding that expertise gained in that one layer will lead to their desired results. But this strategy raises the question: Wouldn’t it be better to invest in all three layers at once? Instead of focusing on just delivering the primitives, or developing the framework or application end, wouldn’t it be optimal to approach all three using one streamlined product or service?

Google is a good example of a company that went all the way. It developed the TPU (Tensor Processing Unit), a hardware accelerator for deep learning, and also created the most popular ML framework, TensorFlow, thought of by some as the “winner” against Caffe. And then, of course, there are Google’s applications, from its search engine to others deep in the ML world.

Machine Learning is still in its infancy when compared to other technology fields. But breaking it down offers us a new perspective on how and why it will continue to disrupt. ML has been growing rapidly, and the eventual integration of quantum computing could push it further still. In time, you may be able to achieve even faster processing, accelerated learning, and increased capabilities, no matter what sector you’re in.