Do you remember your first ChatGPT question? Mine was: “Write a marketing email for our product launch.” But the result was pure corporate boilerplate, generic phrasing, and zero personality. It sounded like every announcement email I’d ever deleted without a second thought.

And yet, I was still amazed. Here was an AI tool producing copy in seconds. Just not anything I’d ever want to send to our clients.

Over the past two years, as part of my research at IOD GenAI Labs, I’ve been digging deep into the world of GenAI; advancing my prompts; building and testing custom GPT assistants; and together with the team, trying to quantify the ROI on content creation based on GenAI.

What I discovered was eye-opening: The gap between AI’s potential and what most of us actually get from it rarely comes down to the technology itself. It comes down to how we ask.

Ever get frustrated with GenAI because it just doesn’t do what you need, despite all the hype about how advanced it’s supposed to be?

Here’s the GenAI trap I see a lot of teams fall into—including my own, in the early days:

We expect these AI models to read our minds, to instantly grasp every nuance of our business and deliver perfect, on-brand results with just a couple of lines of input. But AI isn’t magic. If we treat it like a shortcut for thinking, we’re setting ourselves up for disappointment.

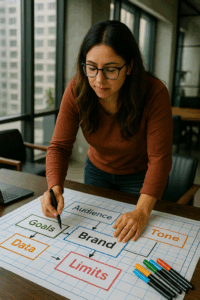

What’s actually needed is a systematic approach. Structured prompting, built on real marketing and technical context, completely changes the game. Once we started applying frameworks (clear context, brand guidelines, specific objectives), the difference was dramatic. The output improved, the alignment with our strategy sharpened, and we spent far less time fixing bland, off-target drafts. Bottom line: the more effort you invest up front in guiding GenAI, the more value (and time) you get back.

In this guide, we’ll explore frameworks and techniques to help you turn vague prompt instructions into precise AI interactions. We’ll also show how you can leverage AWS’s best practices for prompting to get professional-grade results with Amazon Bedrock, every time.

What Is Prompt Engineering?

Prompt engineering is the structured process of crafting and refining written instructions to direct AI models in producing targeted, high-value results. It involves selecting appropriate words, phrases, sentences, punctuation, and formatting to effectively communicate requirements and expectations to large language models.

Think of prompt engineering as the difference between giving someone vague directions versus providing a detailed roadmap. Consider these two approaches:

Vague approach: Create social media content about our new product.

Engineered approach: Create three LinkedIn posts announcing our new project management software launch. Each post should target different personas: IT directors, project managers, and C-suite executives. Use professional tone, include key benefits (30% faster project delivery, real-time collaboration, budget tracking), and end with a call-to-action to schedule a demo. Keep each post under 150 words with relevant hashtags.

When we take the time to design our prompts thoughtfully—choosing the right words, adding context, and clarifying outputs—we’re not just giving instructions; we’re setting the stage for AI to perform reliably. Clear prompts help the AI understand exactly what we expect, which means fewer mistakes, more relevant responses, and outputs that actually hit the mark.

How Context Reduces AI Hallucinations

One of the greatest benefits of a structured approach to prompt engineering is that it can help reduce hallucinations, i.e., when the GenAI model creates outputs that are not grounded in facts. That’s because when we provide context, the AI has less room to guess or invent information.

What do I mean by context? Any background information, instructions, and boundaries that we provide to AI that guide it to produce accurate, relevant, and on-brand responses.

As such, strategic context implementation in your prompts works by:

- Grounding responses in specific information rather than allowing models to draw from potentially outdated or irrelevant training data

- Establishing clear boundaries for what should and shouldn’t be included in responses

- Providing reference materials that models can cite in place of relying on pre-training knowledge alone

- Setting explicit expectations about accuracy standards, tone requirements, and information sources

Consider this transformation showing how context dramatically improves reliability:

Vague approach: Write an email campaign about our software.

Context-rich engineering approach:

[Context] You are a senior email marketing specialist at a B2B SaaS company launching a new customer support automation platform.

[Objective] Create a welcome email sequence for free trial users that nurtures them toward paid conversion. Highlight ease of setup, integration capabilities, and ROI potential.

[Scope] Deliver three emails: Day 1 (welcome + setup guide), Day 7 (feature spotlight), Day 12 (conversion offer).

[Tone]: Use a professional yet friendly tone, highlighting practical benefits and time-saving features.

[Audience] Tailor your content to small business owners and customer service managers who signed up for our 14-day trial.

[Response format & constraints] Each email should contain 200-250 words with clear CTAs. Avoid technical jargon, include social proof, and comply with CAN-SPAM requirements.

The context-rich version eliminates ambiguity about role, scope, audience, brand requirements, and deliverable specifications, dramatically reducing the likelihood of irrelevant content or off-brand messaging.

Beyond CO-STAR: Advanced Prompt Design Techniques

The example above uses the CO-STAR framework, one of the first standardized structures for prompt engineering. More advanced techniques are now leveraged to enhance reasoning capabilities and output reliability. Three must-knows are:

- Chain-of-thought (COT) prompting unlocks complex reasoning capabilities by leading the model through a sequence of intermediate steps. Instead of expecting the AI to jump directly to conclusions, CoT encourages step-by-step thinking that mirrors human problem-solving processes.

- Few-shot learning provides examples in the prompt itself to steer the model toward better performance. By showing the AI what good outputs look like, you create a template for consistent results.

- Prompt chaining divides complex tasks into a series of simpler sub-tasks, feeding each into the model separately for more reliable results. This technique is particularly powerful when working with complex workflows that require multiple types of analysis or different skill sets.

All of these techniques are discussed in detail in AWS’s guide to advanced prompt engineering, complete with examples and best practices.

Moving from Theory to Practice with Prompting (in My Experience)

For me, using GenAI effectively has been all about hands-on trial and error. I’ve experimented with different prompt structures, added layers of context, and learned how much detail actually matters—especially when analyzing content for performance. Across these tests, Anthropic’s golden rule to “be clear, direct, and detailed” consistently delivers the best results.

Here’s an example of a prompt that I’ve refined over time for content performance analysis:

Question: Our blog post received 5,000 views in the first week, with a 3.2% click-through rate to our product page. If our typical conversion rate from product page visits is 8%, how many new customers did this post generate?

Path 1: Views: 5,000 → CTR: 3.2% → Product page visits: 5,000 × 0.032 = 160 → Conversions: 160 × 0.08 = 12.8 ≈ 13 customers

Path 2: Click-throughs: 5,000 × 3.2% = 160 visitors → Conversions: 160 × 8% = 12.8 ≈ 13 customers

Path 3: Product visits: 5,000 × 0.032 = 160 → New customers: 160 × 0.08 = 12.8 ≈ 13 customers

Consistent answer across all paths: 13 new customers

This prompt combines chain-of-thought reasoning with few-shot learning to guide the model through multiple logical steps. The result is consistent, actionable insights rather than generic observations.

Designing this effective prompt has required systematic testing and refinement to make it effective.

My key takeaway from this process? Effective prompts aren’t something you just stumble upon. You have to put in the work: crafting, testing, and refining until you land on the ones that consistently get results.

Clear instructions and a little structure go a long way. When you get this right, you don’t just get better outputs; you set yourself (and your team) up for reliable AI performance, every single time.

Scaling Prompt Engineering Across AI Providers

When you start getting into prompt engineering, you quickly see it’s not a copy-paste job across every AI out there. Each model has its own “personality.” What works for one can flop with another. That’s the catch: Every provider has built their models a bit differently, so the tricks and best practices don’t always line up. If you want to get reliable results at scale, you have to know how to play to each model’s strengths.

- GPT models from OpenAI excel with conversational prompting and system messages that establish context. They respond well to role-playing scenarios and benefit from explicit instructions about reasoning processes.

Find out more from the official OpenAI prompting best practices.

- GPT models from OpenAI excel with conversational prompting and system messages that establish context. They respond well to role-playing scenarios and benefit from explicit instructions about reasoning processes.

- Claude from Anthropic benefits from XML-structured inputs and thinking tags. Claude performs better when you ask it to think step-by-step within <thinking> tags before providing final answers.

Find out more from the official Anthropic prompting best practices.

- Claude from Anthropic benefits from XML-structured inputs and thinking tags. Claude performs better when you ask it to think step-by-step within <thinking> tags before providing final answers.

- Amazon Nova models leverage built-in templates most effectively and are optimized for AWS infrastructure. Nova Micro excels with concise instructions, while Nova Pro handles complex reasoning with detailed context.

Find out more from the official Amazon Nova prompting best practices.

- Amazon Nova models leverage built-in templates most effectively and are optimized for AWS infrastructure. Nova Micro excels with concise instructions, while Nova Pro handles complex reasoning with detailed context.

- Llama from Meta responds well to role-based instructions and benefits from explicit persona definitions. These models perform particularly well when given clear examples of expected behavior patterns.

Find out more from the official Llama prompting best practices.

- Llama from Meta responds well to role-based instructions and benefits from explicit persona definitions. These models perform particularly well when given clear examples of expected behavior patterns.

Even variants within the same family (like GPT-5 versus GPT-4 Turbo, or Claude Opus 4 versus Claude 3.5 Sonnet) can exhibit different behaviors that affect prompt effectiveness.

Looking at the linked best practices, you’ll notice that the different model families mostly share the same fundamental principles: explicit role definition, comprehensive context provision, clear output specifications, and systematic validation processes that ensure consistent performance across diverse use cases.

But when working at enterprise scale, understanding the differences is the only way to maximize performance across your AI toolkit.

3 Steps to Advance Your Prompt Mastery

Let’s face it: AI is already changing the workforce. The risk isn’t that the technology itself will replace you; it’s that someone who knows how to use it will. If you want to stay relevant, you need to lean in, learn the tools, and figure out how to make GenAI work for you, not against you.

Ready to advance your prompt mastery? Start here:

1. Track Agentic Prompting Capabilities

These systems represent the next evolution of AI interaction, where well-engineered prompts become the foundation for intelligent agents that can reason through workflows, use tools dynamically, and handle multi-step processes with minimal human intervention.

For inspiration, check out OpenAI’s Practical Guide to Building Agents.

2. Implement Structured Prompt Engineering & Infrastructure

Effective communication with GenAI starts with structured prompts, but doesn’t stop there. The infrastructure that serves your prompt requests can help unlock GenAI’s full potential with your chosen trade-off for speed, accuracy, and cost.

See AWS Prompt Engineering 101: A Beginner’s Guide.

3. Leverage Retrieval-Augmented Generation (RAG)

If you’re hitting a wall with prompting alone, RAG (retrieval-augmented generation) takes your AI output to the next level. By combining your carefully crafted prompts with relevant document retrieval, you ground GenAI responses in real business context, current data, and proprietary knowledge bases.

See an example implementation in our Beginner’s Guide to Amazon Q: Why, How, and Why Not.

–-

Your investment in prompt engineering isn’t just about immediate gains; it’s building a long-term advantage as GenAI evolves.